The massive deployment in companies of artificial intelligence solutions, which are complex to operate and rely on large volumes of data, poses unique risks to the protection of personal data. More than ever, companies need to review their tools to meet the new challenges raised by AI solutions that process personal data. The PIA (Privacy Impact Assessment) is a key tool for DPOs (Data Protection Officers) in identifying risks related to the processing of personal data and in implementing appropriate remediation measures. It is also a crucial decision-making tool for meeting regulatory requirements.

In this article, we will detail the impacts of AI on the compliance of processing with major regulatory principles and on the security of processing, which is exposed to new risks. We will then share our vision of a PIA tool adapted to the questions and challenges reshaped by the arrival of AI in the processing of personal data.

The impact of AI on data protection principles

Although AI has been developing rapidly since the arrival of generative AI, it is not new in businesses. What is new is the efficiency gains of the solutions, an offering that is more extensive than ever, and above all the multiplication of use cases that are transforming our activities and our relationship to work.

These gains are not without risks to fundamental freedoms, and more particularly to the right to privacy. Indeed, AI systems require massive amounts of data to function effectively, and these datasets often contain personal information. These large volumes of data are then subject to multiple calculations, analyses and complex transformations: from that moment on, the data ingested by the AI model becomes inseparable from the AI solution [1]. In addition, the complexity of these solutions reduces the transparency and traceability of the actions they carry out. Together, these characteristics of AI result in a multitude of impacts on the ability of companies to comply with regulatory requirements regarding the protection of personal data.

Figure 1: examples of impacts on data protection principles.

In addition to Figure 1, three principles can be detailed to illustrate the impacts of AI on data protection as well as the new difficulties that professionals in this field will face:

- Transparency: Ensuring transparency becomes much more complex due to the opacity and complexity of AI models. Machine learning and deep learning algorithms can be “black boxes”, where it is difficult to understand how decisions are made. Professionals are challenged to make these processes understandable and explainable, while ensuring that the information provided to users and regulators is clear and detailed.

- Accuracy: Applying the principle of accuracy is particularly challenging with AI because of the risks of algorithmic bias. AI models can reproduce or even amplify biases present in training data, leading to inaccurate or unfair decisions. Professionals must therefore not only ensure that the data used is accurate and up to date, but also put in place mechanisms to detect and correct algorithmic bias.

- Retention period: Managing data retention becomes more complex with AI. Training AI models with data creates a dependency between the algorithm and the data used, making it difficult or impossible to dissociate the AI from that data. Today, it is virtually impossible to make an AI “forget” specific information, making compliance with data minimization and retention principles more difficult.
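As an illustration of the bias-detection mechanisms mentioned under the accuracy principle, the sketch below compares the rate of favourable decisions across groups. It is a minimal example, not a complete fairness audit: the function names, the `(group, decision)` record format and the sample data are all assumptions made for illustration, and the acceptable size of the gap would have to be defined by each organization.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions per group.

    `records` is an iterable of (group, decision) pairs, where `decision`
    is True for a favourable outcome. This format is illustrative only.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    if not rates:
        return 0.0  # no decisions recorded, so no gap can be measured
    return max(rates.values()) - min(rates.values())


# Hypothetical decisions produced by a model for two groups, "A" and "B".
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
gap = parity_gap(decisions)
print(f"Selection rates: {selection_rates(decisions)}, gap: {gap:.2f}")
```

A large gap does not prove that a model is unfair, but it is a signal that the training data and the decisions deserve a closer review.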

New risks raised by AI

In addition to the impacts on the compliance principles discussed above, AI also has significant effects on the security of processing, thus changing approaches to data protection and risk management.

The use of artificial intelligence highlights three types of risks to the security of processing:

- Traditional risks: Like any technology, the use of artificial intelligence is subject to traditional security risks. These risks include, for example, vulnerabilities in infrastructure, processes, people and equipment. Whether it is traditional systems or AI-based solutions, vulnerabilities in data security and access management persist. Human error, hardware failure, system misconfigurations or insufficiently secured processes remain constant concerns, regardless of technological innovation.

- Amplified risks: Using AI can also exacerbate existing risks. For example, using an assistant built on a large language model, such as Copilot, for everyday tasks can cause problems. By connecting to all your applications, the AI model centralizes all data behind a single access point, which significantly increases the risk of data leakage. Similarly, imperfect management of user identities and access rights increases the risk of malicious acts when an AI solution can access and analyze, with remarkable efficiency, documents that the user is not entitled to see.

- Emerging risks: As with the retention issues described above, it is becoming increasingly difficult to dissociate an AI model from its training data. This can make the exercise of certain rights, such as the right to be forgotten, much more difficult, leading to a risk of non-compliance.

A changing regulatory context

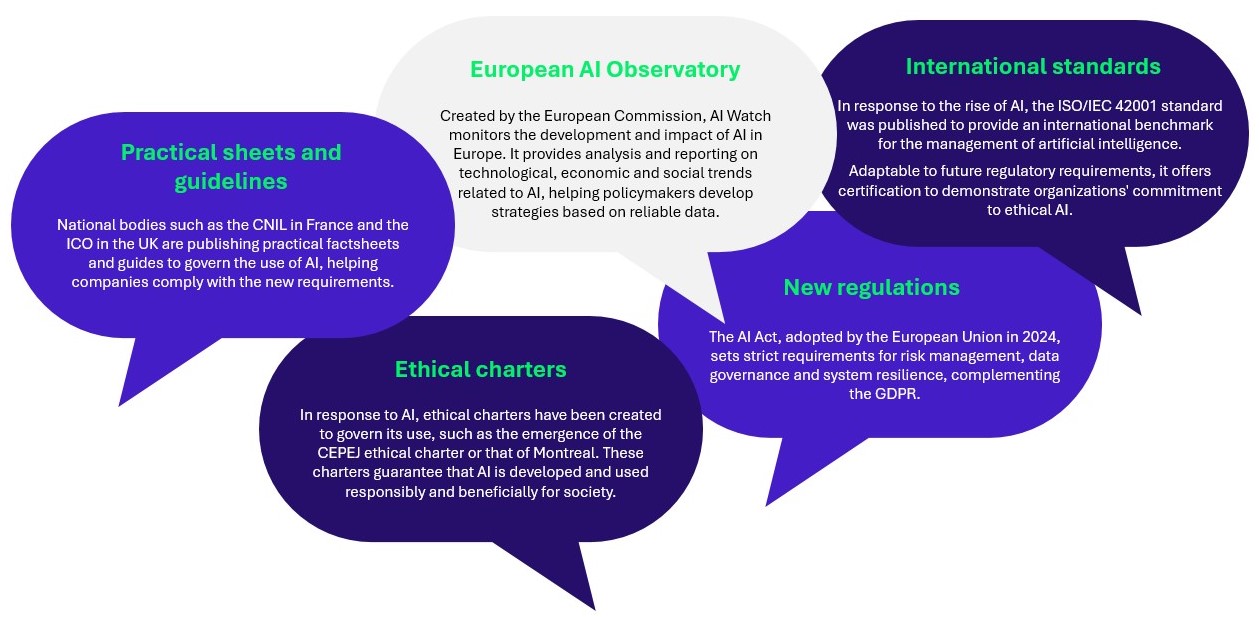

With the global proliferation of AI-powered tools, various players have stepped up their efforts to position themselves in this space. To address the concerns raised by this technology, several initiatives have emerged: the Partnership on AI brings together tech giants like Amazon, Google, and Microsoft to promote open and inclusive research on AI, while the UN organizes the AI for Good Global Summit to explore how AI can serve the Sustainable Development Goals. These are just a few examples among many initiatives aimed at framing and guiding the use of AI, so as to ensure a responsible and beneficial approach to this technology.

Figure 2: examples of initiatives related to the development of AI.

The most recent and impactful change is the adoption of the AI Act (or RIA, the European regulation on AI), which introduces a new requirement for identifying the personal data processing operations that must receive particular care: in addition to the classic criteria of the guidelines of the Article 29 Working Party (WP29, or G29 in French), the use of high-risk AI will systematically require a PIA to be performed. As a reminder, the PIA is an assessment that aims to identify, evaluate and mitigate the risks that certain data processing operations may pose to the privacy of individuals, in particular when they involve sensitive data or complex processes. Thus, the use of a high-risk AI system to process personal data will always require a PIA.
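The screening logic described above can be sketched in a few lines. This is a simplified illustration, not a legal test: it assumes the rule of thumb from the WP29 guidelines that a processing operation meeting two or more of the nine criteria generally calls for a PIA, and it adds the high-risk AI trigger described in this article. The names `Wp29Criterion` and `pia_required`, as well as the `threshold` parameter, are hypothetical.

```python
from enum import Enum


class Wp29Criterion(Enum):
    """The nine criteria listed in the WP29 guidelines (paraphrased)."""
    EVALUATION_OR_SCORING = "evaluation or scoring"
    AUTOMATED_DECISION = "automated decision-making with significant effect"
    SYSTEMATIC_MONITORING = "systematic monitoring"
    SENSITIVE_DATA = "sensitive or highly personal data"
    LARGE_SCALE = "large-scale processing"
    DATASET_COMBINATION = "matching or combining datasets"
    VULNERABLE_SUBJECTS = "vulnerable data subjects"
    INNOVATIVE_USE = "innovative use of technology"
    BLOCKS_RIGHT_OR_SERVICE = "prevents exercising a right or using a service"


def pia_required(criteria_met, uses_high_risk_ai,
                 processes_personal_data=True, threshold=2):
    """Return True when a PIA should be carried out.

    Assumption: meeting `threshold` WP29 criteria (two by default) or
    relying on a high-risk AI system is enough to trigger the PIA.
    """
    if not processes_personal_data:
        return False
    return uses_high_risk_ai or len(set(criteria_met)) >= threshold


# Hypothetical project: large-scale scoring with a non-high-risk AI system.
project_criteria = {
    Wp29Criterion.EVALUATION_OR_SCORING,
    Wp29Criterion.LARGE_SCALE,
}
print(pia_required(project_criteria, uses_high_risk_ai=False))
```

In practice, the classification of an AI system as high-risk is itself the result of a dedicated analysis, which a boolean flag does not capture.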

This new legislation completes the European regulatory arsenal for supervising technology players and solutions: it complements the GDPR, the Data Act, the DSA and the DMA. Although the main objective of the AI Act is to promote ethical and trustworthy use of AI, it shares many similarities with the GDPR and strengthens existing requirements. Examples include the reinforced transparency requirements and the mandatory implementation of human oversight for AI systems, which supports the GDPR’s right to human intervention.

A necessary adaptation of tools and methods

In this evolving context where AI and regulations continue to develop, regulatory monitoring and the adaptation of practices by the various stakeholders are essential. This step is crucial to understand and adapt to the new risks related to the use of AI, by integrating these developments effectively into your AI projects.

To address the new risks induced by the use of AI, we need to adapt our tools, methods and practices. Many changes must be taken into account, such as:

- improving the processes for exercising rights;

- integrating an adapted Privacy by Design methodology;

- upgrading the information provided to users;

- evolving PIA methodologies.

In the rest of this article, we will illustrate this last need using PIA², a new internal tool designed by Wavestone. It was born from the combination of Wavestone's privacy and artificial intelligence expertise and draws on extensive feedback from the field. The tool’s objective is to guarantee optimal management of the risks that the use of artificial intelligence poses to the rights and freedoms of individuals, by offering a methodology capable of identifying those risks in detail.

A new PIA tool for better control of Privacy risks arising from AI

Carrying out a PIA on AI projects requires more in-depth expertise than a traditional project does, with multiple and complex questions related to the specificities of AI systems. Beyond the control points and questions added to the tool, the entire methodology for carrying out the PIA is adapted within Wavestone’s PIA².

As an illustration, stakeholder workshops are expanding to include new players such as data scientists, AI experts, ethics officers and AI solution providers. As a direct consequence, the complexity of data processing based on AI solutions requires more workshops and a longer implementation time in order to identify the data protection issues of your processing precisely and pragmatically.

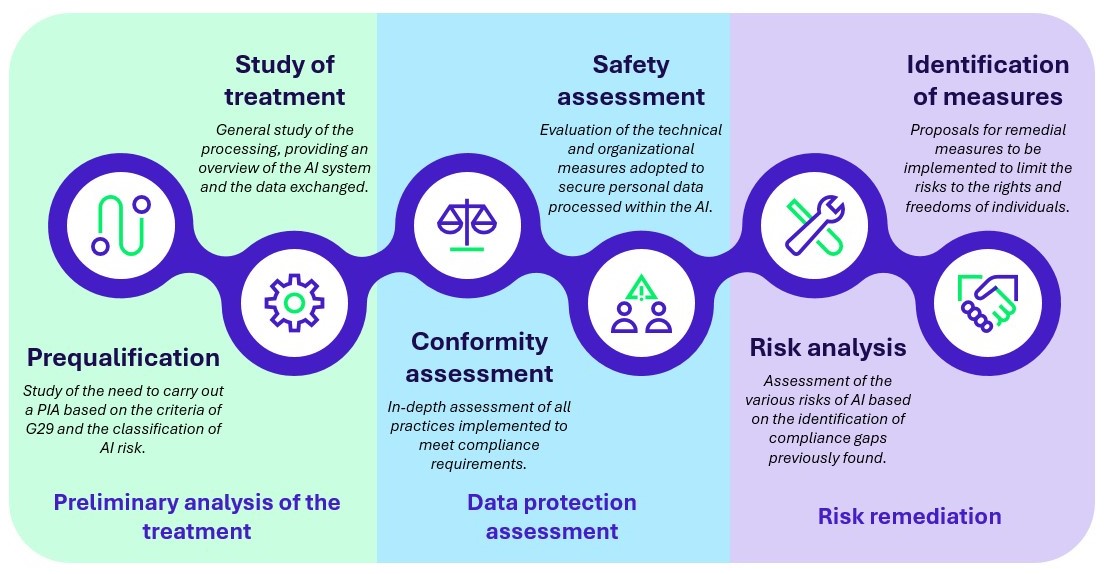

Figure 3: representation of the different stages of PIA².

PIA² strengthens and complements the traditional PIA methodology. The tool designed by Wavestone is made up of three central steps:

- Preliminary analysis of the processing

Since AI poses risks that may be significant for individuals, and since the AI Act requires a PIA for high-risk AI solutions processing personal data, the first question a DPO must answer is whether or not such an analysis is needed. Wavestone’s PIA² tool therefore begins with an analysis of the traditional WP29 (G29) criteria that trigger a PIA, supplemented with questions aimed at identifying the risk level of the AI. As in a traditional PIA, the analysis is completed with a general study of the processing. This study, enriched with specific information on the AI solution, its operation and its use case, serves as a foundation for the entire project (note that the AI Act also requires such information to be present in the PIA relating to high-risk AI). At the end of this study, the DPO has an overview of the personal data processed, how it circulates within the system, and the different stakeholders.

- Data protection assessment

The compliance assessment then examines the organization’s compliance with the applicable data protection regulations. The objective is to review in depth all the practices implemented against the legal requirements, while identifying the gaps to be filled. This assessment focuses on the technical and organizational measures adopted to comply with the regulations and secure personal data within an AI system. This part of the tool has been specially developed to address the new compliance and security challenges of AI, taking into account the new constraints and standards imposed on AI systems. It includes the classic control points of a PIA and those derived from the GDPR, supplemented by AI-specific questions informed by the field experience of our AI experts.

- Risk remediation

Once the project’s state of compliance has been established and the gaps identified, it is possible to assess the potential impacts on the rights and freedoms of the persons concerned by the processing. An in-depth study of the impact of AI on the various compliance and security elements was carried out to feed the PIA² tool. This preparatory work by Wavestone, although optional, makes the PIA easier to carry out by allowing part of the PIA² tool to be automated. The tool automatically proposes specific risks linked to the use of AI within the processing, according to the answers given in parts 1 and 2. Once the risks have been identified, they must then be rated in the traditional way, by assessing their likelihood and their impact.

Still with this automation in mind, Wavestone’s PIA² tool also automatically identifies and proposes corrective measures adapted to the risks detected. For example, solutions such as federated learning, homomorphic encryption (which allows encrypted data to be processed without decrypting it) and the implementation of filters on inputs and outputs can be suggested to mitigate the identified risks. These measures help to strengthen the security and compliance of AI systems, thus ensuring better protection of the rights and freedoms of the data subjects.
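To make the remediation step more concrete, the sketch below shows one way answers could be mapped to proposed risks and measures, and how a risk could then be rated. It is purely illustrative and does not describe how PIA² actually works: the answer keys, the `RULES` table, the 1 to 4 scale and the likelihood × impact score are all assumptions. Only the three measures are taken from the examples cited above.

```python
from dataclasses import dataclass

# Hypothetical rules: answer key -> (proposed risk, suggested measures).
RULES = {
    "training_data_centralised": (
        "Exposure of centralised training data",
        ["federated learning"],
    ),
    "data_processed_in_clear": (
        "Disclosure of personal data during computation",
        ["homomorphic encryption"],
    ),
    "free_text_prompts": (
        "Personal data leaking through prompts or outputs",
        ["filters on inputs and outputs"],
    ),
}


@dataclass
class Risk:
    name: str
    measures: list
    likelihood: int  # assumed scale: 1 (lowest) to 4 (highest)
    impact: int      # same assumed scale

    @property
    def score(self) -> int:
        """Simple rating: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def propose_risks(answers):
    """Return (risk name, measures) pairs triggered by the answers."""
    return [
        (name, list(measures))
        for key, (name, measures) in RULES.items()
        if answers.get(key, False)
    ]


def rate(name, measures, likelihood, impact):
    """Attach a likelihood and impact rating to a proposed risk."""
    for value in (likelihood, impact):
        if value not in range(1, 5):
            raise ValueError("ratings must be between 1 and 4")
    return Risk(name, measures, likelihood, impact)


# Hypothetical answers collected in parts 1 and 2.
answers = {"free_text_prompts": True, "training_data_centralised": False}
proposals = propose_risks(answers)
risk = rate(*proposals[0], likelihood=3, impact=4)
print(risk.name, risk.score, risk.measures)
```

The rating itself remains a human judgment: the automation only proposes candidate risks and measures, which the DPO and the project stakeholders then confirm, adjust or reject.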

Once these three major steps have been completed, the results must be validated and concrete actions implemented to guarantee compliance and keep the risks linked to AI under control.

Thus, when a processing operation involves AI, risk reduction becomes even more complex. Constant monitoring of the subject and support from experts in the field become essential. At present, many unknowns remain, as shown by the fact that some organizations are still in the study phase and that regulators have yet to clarify their positions.

To better understand and manage these challenges, it is essential to adopt a collaborative approach across areas of expertise. At Wavestone, our artificial intelligence and data protection experts have had to cooperate closely to identify and respond to these major issues. Our work analyzing AI solutions, the new related regulations and data protection risks has clearly highlighted how important it is for DPOs to draw on increasingly multidisciplinary expertise.

Acknowledgements

We would like to thank Gaëtan FERNANDES for his contribution to this article.

Notes

[1]: Although experimental techniques such as machine unlearning aim to offer a form of reversibility and the possibility of removing data from an AI model, they remain fairly unreliable today.