Artificial intelligence (AI) is transforming numerous sectors, including industry. The latest advancements, particularly those based on Machine Learning (ML) such as generative AI, are opening new opportunities in process automation, supply chain optimization, personalization, and more. These innovations enable companies to increase efficiency, reduce costs, enhance the user experience, and strengthen their competitiveness through innovation.

However, this evolution also brings cybersecurity challenges specific to these systems, leading industrial companies to ask how to secure these applications.

What opportunities does AI bring? And what cybersecurity risks come with it?

AI & Industry

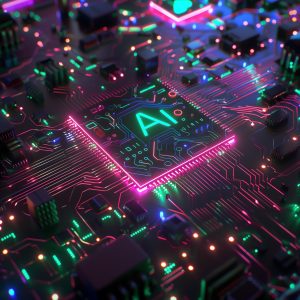

To better understand the range of possibilities offered by these technologies, Wavestone has created the 2024 Generative AI Use Case Radar for Operations. This radar lists the usage trends observed among its industrial clients, as well as other potential use cases that may develop in the coming years:

Figure 1 – Generative AI use cases Radar for Operations

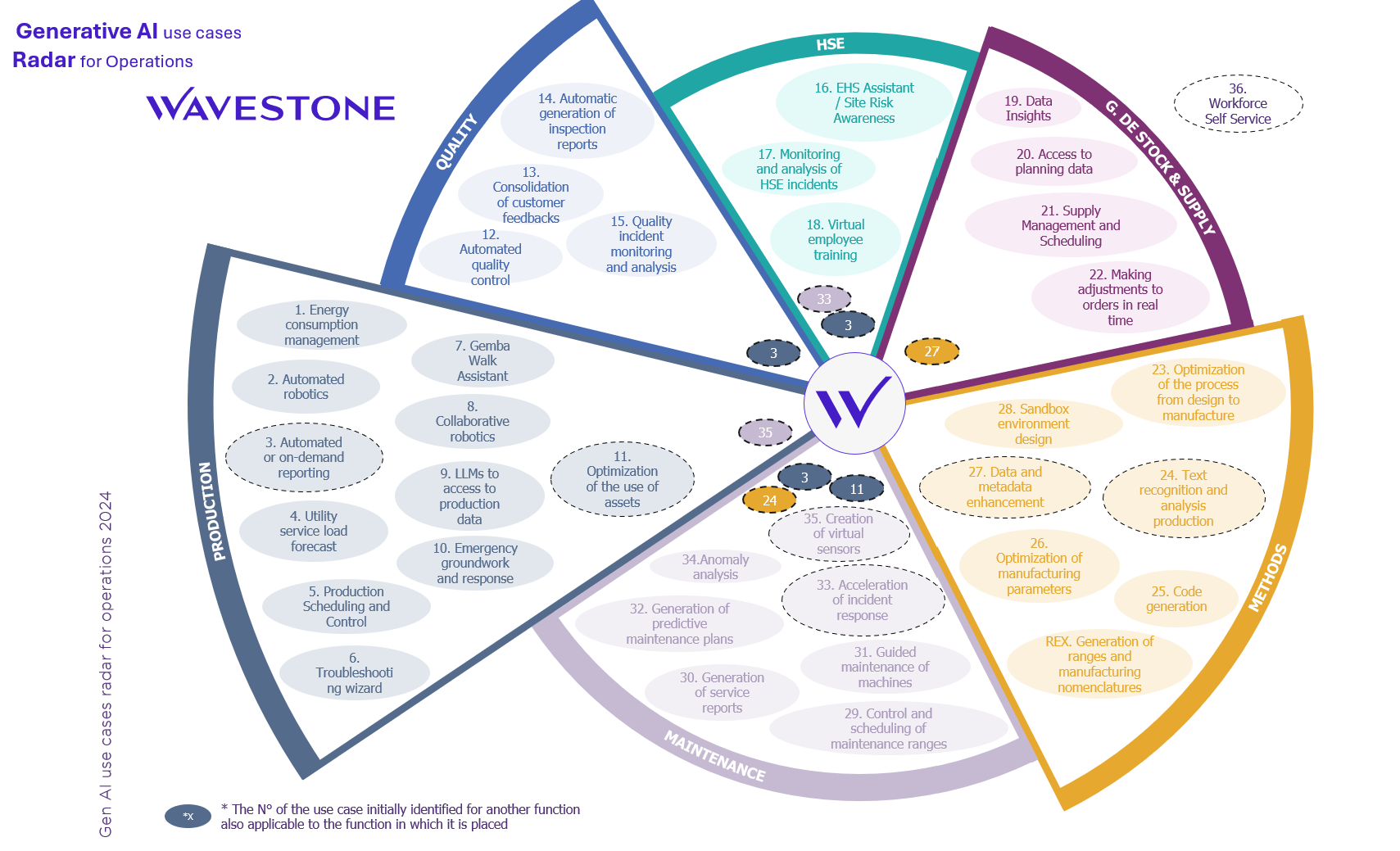

Wavestone has identified four types of use cases (decision support, tool and process improvement, document generation, and task assistance) that impact various industrial functions (production, quality, maintenance, inventory management, supply chain, etc.).

Figure 2 – Main uses of generative AI in industrial operations

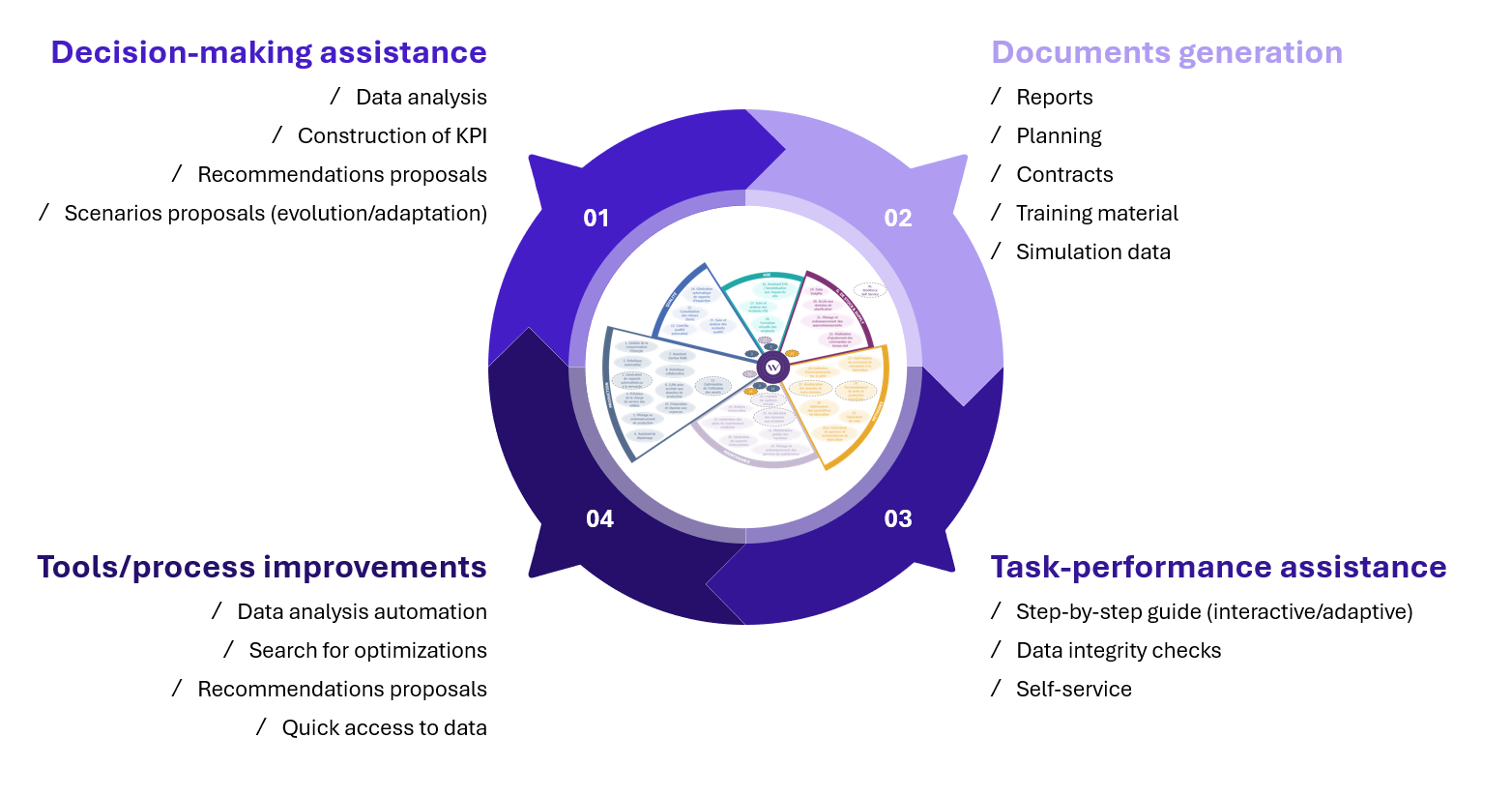

Here are some concrete examples illustrating how these technologies integrate into the operations of various sectors, what they bring, and the potential impacts of cyberattacks on these systems:

Figure 3 – Real AI use cases in industrial sector

These systems provide significant technological and strategic advantages, as well as considerable savings in cost and time.

However, integrating these technologies can also introduce new risks that companies must consider.

AI Cyber Risks

How can an attacker compromise these systems?

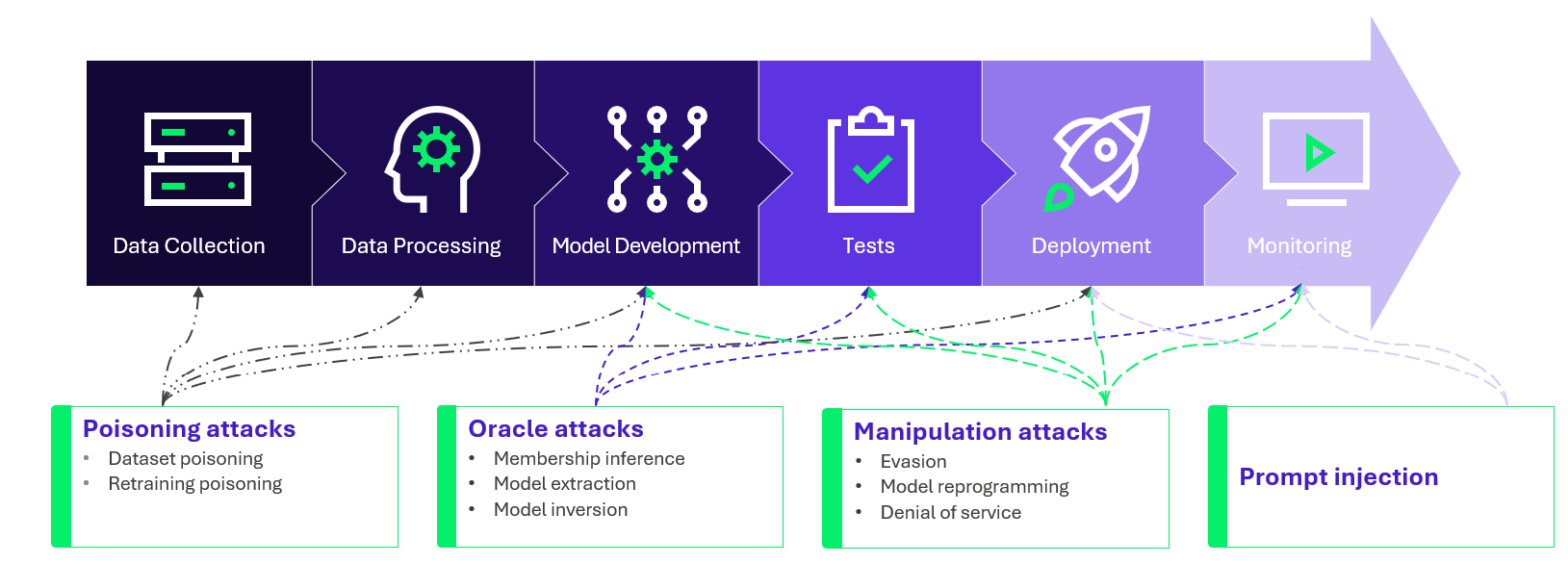

There are several categories of AI-specific attacks, each exploiting vulnerabilities at a different phase of the model lifecycle; together they form a broad attack surface:

Figure 4 – AI lifecycle: possible attacks

Most of these attacks aim to divert AI from its intended use. The objectives can include extracting confidential information or making the AI perform unauthorized actions, thereby compromising the security and integrity of the systems.

To understand these attacks in detail, Wavestone’s experts have illustrated evasion and oracle methods in this dedicated article.

What is the situation regarding these risks for industrial companies?

As things stand, the risks associated with AI in industry vary greatly depending on the sector and the application.

To carry out oracle, manipulation, or prompt injection attacks against an AI system, an attacker must be able to interact with it by supplying input data. This is straightforward with generative AI systems such as ChatGPT, which only start operating once a user provides input. Conversely, other systems, such as those used for predictive maintenance (AI-based solutions that anticipate and prevent equipment failures), do not rely on human instructions to function, which makes interacting with them much harder. Moreover, the input data these systems consume is often highly specific and difficult to obtain or manipulate.

Data poisoning attacks could be an alternative, as they do not require interacting with the deployed AI system. However, the attacker would first need to infiltrate the information system to gain access to the AI, then develop a deep understanding of its architecture, and only then attempt to alter its behavior, with no guarantee of success. Moreover, companies with a good level of cybersecurity already have countermeasures and protection methods in place that significantly reduce the chances of such an attack succeeding.
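Once the training data is within reach, the poisoning mechanism itself can be simple, which is why the access barrier described above matters so much. The toy sketch below is hypothetical and not taken from any real predictive maintenance system: a failure detector learns its alarm threshold as the midpoint between the average "normal" and average "failure" vibration reading, and an attacker able to write to the training set adds high readings mislabelled as normal. The readings, labels, units and midpoint rule are all illustrative assumptions.

```python
from statistics import mean

def train_threshold(samples):
    """Learn an alarm threshold as the midpoint between the mean 'normal'
    reading and the mean 'failure' reading (a deliberately simple model)."""
    normal = [x for x, label in samples if label == "normal"]
    failure = [x for x, label in samples if label == "failure"]
    return (mean(normal) + mean(failure)) / 2

def predict(threshold, reading):
    """Flag a failure when the reading reaches the learned threshold."""
    return "failure" if reading >= threshold else "normal"

# Hypothetical labelled vibration readings (mm/s) used for training
clean_data = [(2.0, "normal"), (2.5, "normal"), (3.0, "normal"),
              (7.0, "failure"), (8.0, "failure"), (9.0, "failure")]

# Hypothetical poison: high readings deliberately mislabelled as "normal"
poison = [(8.5, "normal"), (9.0, "normal"), (9.5, "normal")]

clean_t = train_threshold(clean_data)              # midpoint of 2.5 and 8.0
poisoned_t = train_threshold(clean_data + poison)  # midpoint of 5.75 and 8.0

print(clean_t, predict(clean_t, 6.5))         # 5.25 failure
print(poisoned_t, predict(poisoned_t, 6.5))   # 6.875 normal
```

A real model is far less transparent than a single threshold, so an attacker would need a thorough understanding of its architecture to obtain a targeted effect like this one.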

By comparison, other attack methods that do not specifically target the AI system are often easier to carry out and may give an attacker a greater opportunity to harm a company.

However, some AI applications, such as generative AI assistants, are vulnerable to the input-based attacks mentioned above.

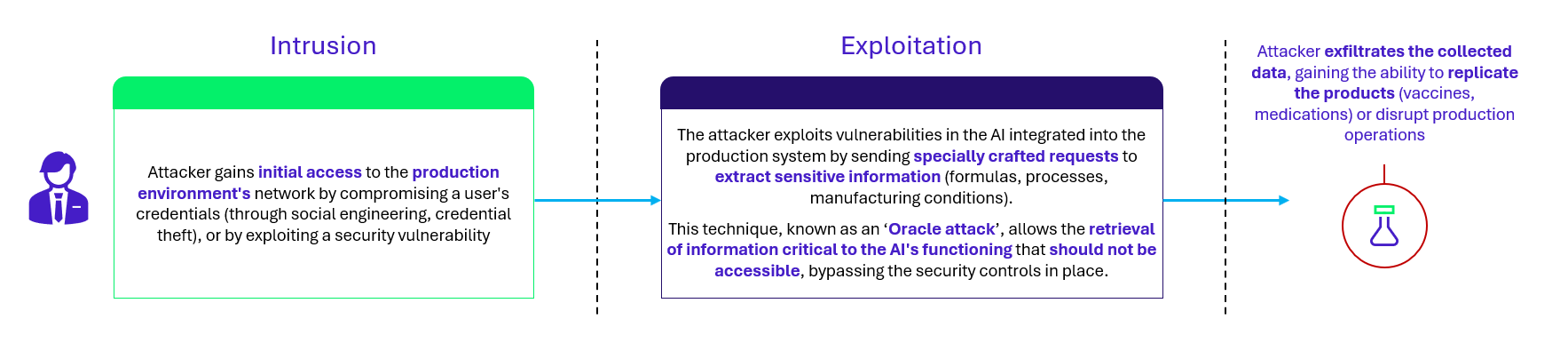

Here is an example of an attack scenario on the vaccine production assistant shown in Figure 3.

Context of the use case

Employees write their request to the assistant, attaching the specifications of the vaccine to be produced. The assistant analyzes the request and, using a Retrieval-Augmented Generation (RAG) module (which supplies the AI with additional data without retraining it), cross-references this information with the company's database. Finally, the assistant returns a machine instruction file that employees can use directly to launch production.
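To make the RAG step more concrete, here is a minimal sketch of how such an assistant could assemble its prompt. It assumes an in-memory dictionary standing in for the company database and uses simple word-overlap ranking in place of the vector similarity search most RAG modules rely on; the document contents and the `retrieve` and `build_prompt` helpers are hypothetical.

```python
import re

# Hypothetical stand-in for the company database queried by the RAG module
KNOWLEDGE_BASE = {
    "recipe-A12": "Antigen A12: incubation at 37 C for 48 h, pH 7.2.",
    "line-3-config": "Production line 3 accepts batch files in format v2.",
    "hr-policy": "Annual leave requests go through the HR portal.",
}

def tokenize(text):
    """Lower-case the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, k=2):
    """Return up to k document ids ranked by word overlap with the query
    (a simplified stand-in for embedding-based similarity search)."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE.items(),
                    key=lambda item: len(q & tokenize(item[1])),
                    reverse=True)
    # Keep only documents that share at least one word with the query
    return [doc_id for doc_id, text in ranked[:k] if q & tokenize(text)]

def build_prompt(request, specification):
    """Place the retrieved company data next to the employee's input."""
    doc_ids = retrieve(request + " " + specification)
    context = "\n".join(KNOWLEDGE_BASE[d] for d in doc_ids)
    return (
        "You generate machine instruction files for production.\n"
        f"Context from company database:\n{context}\n"
        f"Specification:\n{specification}\n"
        f"Request:\n{request}\n"
    )

print(build_prompt("Prepare a batch file for line 3", "Antigen A12 incubation"))
```

Whatever the retrieval technique, the company data it returns is placed inside the prompt alongside the employee's request, so anyone able to shape that request can try to make the assistant reveal it.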

Attack scenario

Figure 5 – Attack scenario killchain on vaccine production assistant

The consequences of a theft of trade secrets such as this could include the resale of this information to competitors or its public disclosure, which could have significant financial and reputational implications. However, conventional access management security measures can help to reduce the risk of this type of attack.
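As an illustration of such a measure, the assistant can enforce the requesting employee's existing permissions on what the RAG module is allowed to retrieve, instead of relying on the model to withhold sensitive content. This is a minimal sketch assuming each record is tagged with the roles allowed to read it; the records, role names and the `authorized_documents` helper are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_roles: frozenset  # roles permitted to read this record

# Hypothetical records and role names, for illustration only
DOCUMENTS = [
    Document("line-3-config",
             "Production line 3 accepts batch files in format v2.",
             frozenset({"operator", "engineer"})),
    Document("recipe-A12",
             "Antigen A12: incubation at 37 C for 48 h, pH 7.2.",
             frozenset({"engineer"})),
]

def authorized_documents(user_roles):
    """Return only the records the requesting user may read. Intended to run
    before any retrieved text is placed in the prompt, so the prompt itself
    cannot widen what the user has access to."""
    return [d for d in DOCUMENTS if d.allowed_roles & set(user_roles)]

print([d.doc_id for d in authorized_documents({"operator"})])
print([d.doc_id for d in authorized_documents({"engineer"})])
```

Because the filter runs before any data reaches the model, a manipulated prompt can at most expose documents the user was already entitled to read.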

Finally, although some AI applications are vulnerable to new attacks, security measures tailored to the specific weaknesses of each system can provide effective protection.

So, what are the points to remember?

Ultimately, the risks associated with AI technologies for industrial companies are not fundamentally new.

Although some AI systems are vulnerable to new attacks, the cybersecurity principles for protecting against them and limiting their impact remain unchanged.

It therefore remains essential to adopt a risk-based approach and integrate cybersecurity by design for any AI application.