Among the technologies that seemed like science fiction only a few decades ago and are now an integral part of the digital ecosystem, Facial Recognition (FR) holds a prominent place. Indeed, this tool is increasingly present in our daily lives: unlocking our phones, customs gates at airports, authentication for payment systems, automated sorting of our photos, and even person search.

Demystifying how it works

These technologies aim to identify and extract faces from images or video streams to calculate a facial imprint, encapsulating all of their features, in order to facilitate a subsequent search and identification.

The idea of using the face as a form of identification, as well as the earliest functional systems, dates back to the 1960s with the Woodrow Wilson Bledsoe System (1964). This system was capable of recognizing faces by analyzing digitized photos. Its approach relied on measuring facial features such as the distance between the eyes and the width of the nose.

The latest advancements in artificial intelligence, particularly with the advent of Machine Learning and the explosion of shared photos and videos on the internet, have allowed for rapid and widespread development of facial recognition algorithms.

In practice, these systems rely on the images captured by our smartphones and cameras, which consist of a grid of pixels, each carrying values for three colors: red, green, and blue. Unlike human vision, an FR system perceives these images in purely numerical form. An FR algorithm typically processes them in the following steps:

- Capturing the image: It all begins with capturing an image containing a face. This image can come from a photo taken by a camera or be extracted from a video.

- Face detection: The algorithm will analyze the image to detect the presence and position of faces. To do this, it will use image processing techniques to search for patterns and characteristic features of faces, such as contours, structural elements (like eyes), and variations in brightness.

- Extraction of facial features: Once the face is detected, the algorithm extracts specific characteristics that distinguish it from other faces. These include elements intelligible to humans (eye position, overall shape, etc.) as well as elements intelligible only to the AI model (gradients and specific pixel arrangements).

- Creation of a facial imprint: Based on the extracted features, the algorithm creates a facial imprint, which is essentially a summary of the face, in a digital format understandable for the model.

- Comparison with the database: To perform identifications and searches, the resulting facial imprint can be compared with imprint or image databases. Matches are generally reported with a confidence percentage, based on the calculated level of resemblance.
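The comparison step above can be sketched as follows. This is a minimal illustration, assuming that facial imprints are fixed-length numeric vectors and that resemblance is measured with cosine similarity; the article does not specify either. The names `cosine_similarity` and `best_match`, the four-value toy imprints, and the 0.8 threshold are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two imprint vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_match(probe, database, threshold=0.8):
    """Return (name, score) for the closest enrolled imprint.

    The name is None when even the best score is below the threshold,
    meaning the probe is treated as an unknown face.
    """
    name, score = max(
        ((n, cosine_similarity(probe, v)) for n, v in database.items()),
        key=lambda item: item[1],
    )
    return (name, score) if score >= threshold else (None, score)

# Toy database of enrolled imprints (hypothetical values).
database = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.8, 0.2, 0.6],
}

probe = [0.85, 0.15, 0.35, 0.15]    # new capture, close to alice's imprint
stranger = [0.0, 0.0, 1.0, 0.0]     # capture resembling nobody enrolled

match, score = best_match(probe, database)
unknown, unknown_score = best_match(stranger, database)
print(match, f"{score:.0%}")
print(unknown, f"{unknown_score:.0%}")
```

The score plays the role of the confidence percentage mentioned above. Where the threshold is set determines the trade-off between false acceptances and false rejections.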

Nowadays, the underlying mechanics of image processing and machine learning offer excellent performance in terms of speed and consistency of results. However, like other automated technological services, they can be vulnerable to cybersecurity threats and may, in some cases, be exploited by an attacker.

Overview of attacks and weaknesses

The objective here is not to enumerate all potential attacks on machine learning systems, but to focus on those that can target FR algorithms. The main types are as follows:

Adversarial attacks

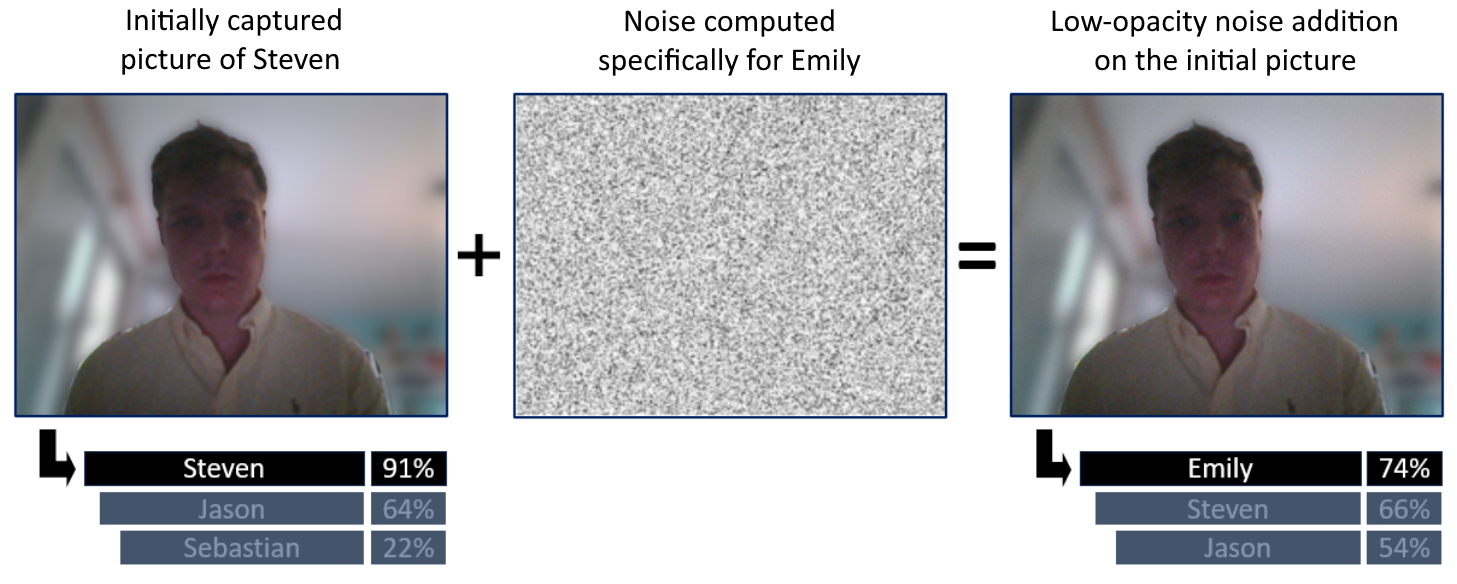

The first cracks in the armor of FR algorithms, discovered in the 2010s, involve introducing very slight noise into the images sent to the system. This alteration, nearly invisible to a human, can disrupt the fine features perceived by the model and intentionally cause the underlying neural network to misunderstand and misclassify the image. An attacker who can alter the submitted images and has good knowledge of the system could thus potentially impersonate a user.

Example of an adversarial attack
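The principle can be illustrated on a deliberately tiny model. The sketch below assumes a hypothetical linear classifier over six pixels (a real FR system uses a deep neural network) and applies a perturbation in the spirit of the fast gradient sign method: each pixel moves by at most `epsilon` in the direction that lowers the score. The weights, bias, image values and `epsilon` are made up for the example.

```python
def score(weights, bias, pixels):
    """Linear decision score: positive means 'recognized as the enrolled user'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def perturb(weights, pixels, epsilon):
    """Shift every pixel by at most epsilon against the gradient of the score.

    For a linear model the gradient with respect to the pixels is simply the
    weight vector, so the attacker only needs the sign of each weight.
    Values are clipped to the valid pixel range [0, 1].
    """
    return [
        min(1.0, max(0.0, p - epsilon * sign(w)))
        for w, p in zip(weights, pixels)
    ]

# Hypothetical model and image (pixel intensities in [0, 1]).
weights = [0.6, -0.4, 0.5, -0.7, 0.3, -0.5]
bias = -0.05
image = [0.6, 0.4, 0.5, 0.4, 0.6, 0.5]

adversarial = perturb(weights, image, epsilon=0.05)
largest_change = max(abs(a - b) for a, b in zip(adversarial, image))

print("original score:   ", score(weights, bias, image))
print("adversarial score:", score(weights, bias, adversarial))
print("largest pixel change:", largest_change)
```

No pixel changes by more than 0.05, yet the score changes sign. This variant assumes the attacker knows the model's weights, which matches the "good knowledge of the system" mentioned above.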

Occlusion attacks

Since 2015, researchers have been able to put into practice attacks where occlusion of parts of the face, such as wearing glasses or masks, can deceive certain FR models. Indeed, the model may fail to detect and extract faces from captured images, or extract inconsistent features. In both cases, such attacks allow for subject anonymization.

Examples of occlusion techniques
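As a rough illustration of why occlusion can anonymize a subject, the sketch below compares a tiny 4x4 grayscale "face" with a capture in which the eye row is painted over, as dark glasses would do. It assumes a toy matcher based on the mean absolute pixel difference. Real systems compare learned features rather than raw pixels, and the image values and the 0.05 threshold are hypothetical.

```python
def occlude_rows(image, first_row, last_row, value=0.0):
    """Return a copy of the image with rows first_row..last_row painted over."""
    return [
        [value] * len(row) if first_row <= r <= last_row else row[:]
        for r, row in enumerate(image)
    ]

def mean_abs_difference(a, b):
    """Toy dissimilarity: average absolute pixel difference between two images."""
    diffs = [abs(x - y) for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def is_same_face(enrolled, capture, max_difference=0.05):
    """Accept the capture only if it stays close to the enrolled image."""
    return mean_abs_difference(enrolled, capture) <= max_difference

# Hypothetical enrolled image; row 1 stands for the eye region.
enrolled = [
    [0.8, 0.7, 0.7, 0.8],
    [0.2, 0.6, 0.6, 0.2],
    [0.7, 0.5, 0.5, 0.7],
    [0.6, 0.3, 0.3, 0.6],
]

# A fresh capture differs slightly (sensor noise); glasses then hide the eyes.
fresh_capture = [[p + 0.01 for p in row] for row in enrolled]
with_glasses = occlude_rows(fresh_capture, 1, 1)

print(is_same_face(enrolled, fresh_capture))
print(is_same_face(enrolled, with_glasses))
```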

Face substitution attacks

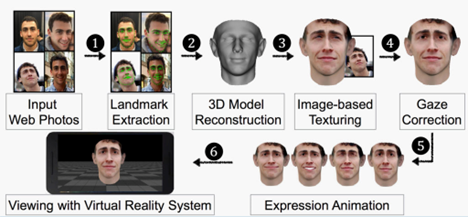

As in spy movies, researchers have explored face substitution attacks, which deceive systems by presenting artificial faces that resemble real ones. These techniques range from simple cardboard masks to custom-made silicone masks replicating a person’s face in detail. Such attacks have raised concerns about the reliability of facial recognition systems in real-world scenarios.

Note that some facial recognition systems (such as Microsoft’s Windows Hello) rely on infrared cameras to ensure they are facing a genuine face.

Procedure for creating a face for a face substitution attack

Superposition attacks

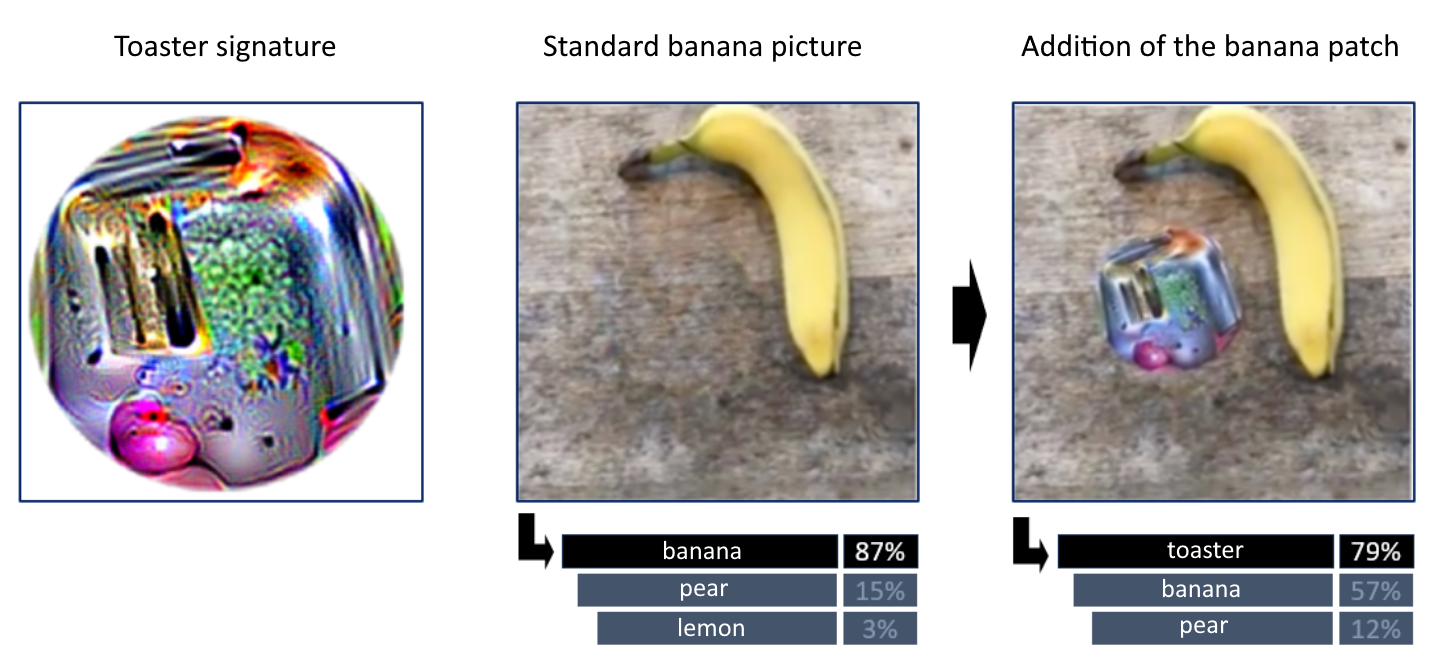

In some cases, simply overlaying a patch on another image can mislead FR algorithms. It is possible to calculate the image that best represents a person or object (in our case, a toaster) from the model’s perspective, and insert this element into the image we want to manipulate. The FR model will tend to focus on this area, potentially completely altering its predictions.

Example of a superposition attack
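The idea of computing the patch that "best represents" a class from the model's perspective can be shown on a toy model. The sketch assumes a hypothetical linear classifier over a 4x4 image that chooses between "person" and "toaster". For such a model the strongest patch sets each covered pixel to 1 where the weight favors the target class and to 0 elsewhere. Real attacks optimize patches against neural networks, and all weights here are made up.

```python
def margin(weights, image):
    """Toaster-minus-person score: positive means the model answers 'toaster'."""
    return sum(
        w * p
        for weight_row, pixel_row in zip(weights, image)
        for w, p in zip(weight_row, pixel_row)
    )

def classify(weights, image):
    return "toaster" if margin(weights, image) > 0 else "person"

def best_patch(weights, top, left, size):
    """Patch that maximizes the margin inside a size x size window."""
    return [
        [1.0 if weights[top + r][left + c] > 0 else 0.0 for c in range(size)]
        for r in range(size)
    ]

def paste(image, patch, top, left):
    """Return a copy of the image with the patch overlaid at (top, left)."""
    out = [row[:] for row in image]
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            out[top + r][left + c] = value
    return out

# Hypothetical weights: the top-left corner has an outsized influence.
weights = [
    [0.9, -0.8, -0.2, -0.1],
    [-0.7, 0.8, -0.1, -0.2],
    [-0.2, -0.1, -0.1, -0.2],
    [-0.1, -0.2, -0.2, -0.1],
]
image = [[0.5] * 4 for _ in range(4)]   # stands in for a photo of a person

patch = best_patch(weights, top=0, left=0, size=2)
patched = paste(image, patch, top=0, left=0)

print(classify(weights, image))
print(classify(weights, patched))
```

Only 4 of the 16 pixels change, but they sit where the model is most sensitive, which is enough to flip its answer.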

Illumination attacks

By playing with the surrounding lighting, it is often possible to degrade the performance of an FR algorithm, which highlights the need to take environmental conditions into account.
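One way lighting can destroy information is sensor saturation. The sketch below assumes a very simplified model in which stronger lighting multiplies every pixel by a gain and the sensor clips values at 1.0. It then measures how well the contrast pattern of a row of pixels survives, using a plain correlation coefficient. The pixel values and gains are hypothetical.

```python
import math

def relight(pixels, gain):
    """Simulate stronger lighting: scale each pixel, clipping at sensor maximum."""
    return [min(1.0, p * gain) for p in pixels]

def correlation(a, b):
    """Pearson correlation between two pixel rows (1.0 = same contrast pattern)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    centered_a = [x - mean_a for x in a]
    centered_b = [y - mean_b for y in b]
    dot = sum(x * y for x, y in zip(centered_a, centered_b))
    denom = math.sqrt(
        sum(x * x for x in centered_a) * sum(y * y for y in centered_b)
    )
    # A completely flat row carries no pattern at all.
    return dot / denom if denom else 0.0

face_row = [0.2, 0.5, 0.35, 0.6]    # hypothetical row of pixel intensities

mild = relight(face_row, 1.2)       # slightly brighter, nothing saturates
harsh = relight(face_row, 3.0)      # three of the four pixels saturate

print(correlation(face_row, mild))
print(correlation(face_row, harsh))
```

A moderate change leaves the pattern intact once brightness is normalized, whereas saturation erases differences between pixels that no normalization can recover.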

Tomorrow, a defense equal to the risks

Faced with these fallible systems, a whole set of protection strategies is emerging, generally focused on verifying the consistency and veracity of the images presented. Here is a brief overview of the areas of work on the defense side:

- Blinking: Blinking can be used as a defense mechanism to verify the authenticity of faces in real time, as it is hard to reproduce in a natural way from an image or video. By checking for natural blink patterns, facial recognition systems can detect fraud attempts and enhance the security of biometric identification.

- Gait analysis: Gait analysis provides an additional layer of defense by checking the consistency between the claimed identity and the way a person walks. This method can help prevent attacks based on imposters or fakes by detecting irregularities in the way a person moves, increasing the security of facial recognition systems.

- Dynamic facial features: By using dynamic facial features, such as muscle movements and blinking, liveness analysis helps distinguish real faces from fakes, preventing attacks based on pre-recorded images or videos. This technique enhances the security of biometric authentication by ensuring that the faces submitted for recognition belong to a live person who is physically present.

- Full 3D scan: Full 3D scanning captures the three-dimensional details of the face, providing a more accurate representation that is difficult to counterfeit. Using this technique, facial recognition systems can detect fraud attempts that rely on masks or facial sculptures, enhancing the security of biometric identification.

- Trusted complementary biometric techniques: By combining multiple biometric modalities such as facial recognition, fingerprint, and voice recognition, facial recognition systems can benefit from multiple layers of defense. This approach enhances security by reducing the risk of recognition errors and bypass, providing more robust and reliable biometric identification.
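Taking the blinking check from the list above as an example, one commonly used signal is the eye aspect ratio (EAR): the ratio of the eye's vertical openings to its width, computed from six landmarks around the eye. The sketch below assumes the landmarks are supplied by a separate landmark detector, which is not shown. The 0.2 threshold, the two-frame minimum and the sample values are hypothetical and would need tuning on real data.

```python
import math

def distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def eye_aspect_ratio(eye):
    """EAR from six landmarks p1..p6, with p1 and p4 at the eye corners,
    p2 and p3 on the upper lid, p6 and p5 below them on the lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = distance(p2, p6) + distance(p3, p5)
    horizontal = distance(p1, p4)
    return vertical / (2.0 * horizontal)

def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count blinks: runs of at least min_frames low-EAR frames that end
    with the eye reopening."""
    blinks = 0
    closed_frames = 0
    for ear in ear_series:
        if ear < threshold:
            closed_frames += 1
        else:
            if closed_frames >= min_frames:
                blinks += 1
            closed_frames = 0
    return blinks

# Hypothetical landmark sets for an open and a nearly closed eye.
open_eye = [(0, 0), (1, 0.5), (2, 0.5), (3, 0), (2, -0.5), (1, -0.5)]
closed_eye = [(0, 0), (1, 0.1), (2, 0.1), (3, 0), (2, -0.1), (1, -0.1)]

# Hypothetical per-frame EAR values from a short video.
ear_series = [0.33, 0.32, 0.10, 0.08, 0.31, 0.33,
              0.09, 0.30, 0.07, 0.06, 0.05, 0.32]

print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
print(count_blinks(ear_series))
print(count_blinks([0.33] * 12))    # a static photo never blinks
```

A printed photo held in front of the camera yields a flat EAR series and no blinks. A replayed video of the genuine user would still pass this check, which is one reason to combine several defenses.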

Conclusion

Due to their “black box” design, AI-based systems, including more recently generative AI, remain fallible. New attack types and techniques are emerging, as are defense technologies.

In the case of facial recognition, this fallibility can expose users to obvious risks of identity theft. As with any biometric authentication, and unlike a simple password, the same face is used in both professional and personal contexts, so a compromise in one carries over to the other.

With the democratization of “deepfake” technologies and the erosion of our trust in images, these systems must be secured with an effort commensurate with the great responsibility that can be entrusted to them.