Step 0: context and objectives

Created in 2019, Wavegame is a Wavestone inter-school challenge designed to promote cybersecurity expertise and the consulting profession. In its 2023 edition, 33 teams competed in a hands-on exercise focused on securing an AWS infrastructure.

The challenge was split into 2 tracks, the first dedicated to Business majors and the latter to Computer Science majors. The technical track consisted of 2 qualifying exercises and a final event. The second exercise is the topic of this article.

As part of a futuristic scenario, the students, acting as consultants, are hired by France Fusion, a company operating the country’s first nuclear fusion plants. France Fusion is developing a Cloud-based monitoring platform to analyze data from its proprietary industrial equipment. For this purpose, it uses an ElasticSearch database.

The platform started as an independent Proof of Concept (PoC), detached from the security department, and a team of developers succeeded in deploying a functional architecture on AWS. The students are tasked with strengthening the infrastructure’s security in accordance with France Fusion’s Public Cloud policies.

The technical challenge was significant: deploying a self-service, vulnerable infrastructure across 33 AWS accounts. This also meant granting students full admin access and the permissions needed to make direct modifications from the AWS console, all within a limited budget given the number of participants. In this article, we share the recipe that made this challenge a reality.

Step 1: draw up an architecture with an educational dimension

Before delving into the development of the coding game, we had to keep in mind 4 constraints:

- Target Audience: as our participants are students with heterogeneous backgrounds in the Cloud, it is important to take this diversity into account. Therefore, we focused on using essential AWS services only (e.g., S3, EC2, Lambda), because such resources are well-documented, and students may already have used them in class or as part of personal projects.

- Theme: our objective was to create an architecture similar to a client environment. The immersion and realism of the interface were key to student engagement. We therefore opted for an ELK stack on an EC2 instance, as it seemed appropriate for a proof of concept with a monitoring dimension.

- Costs: as the infrastructure would be available to students for two weeks and replicated across 33 AWS accounts, it was in our interest to optimize costs. To achieve this, we used the AWS Pricing Calculator to estimate costs, opted for a low-cost region, and built the infrastructure around pay-as-you-go services such as Lambda functions.

- Deadlines: to cope with a tight schedule, we defined objectives and deadlines with enough margin to overcome unexpected technical issues. The main stages of the project were development, testing, account creation and deployment.

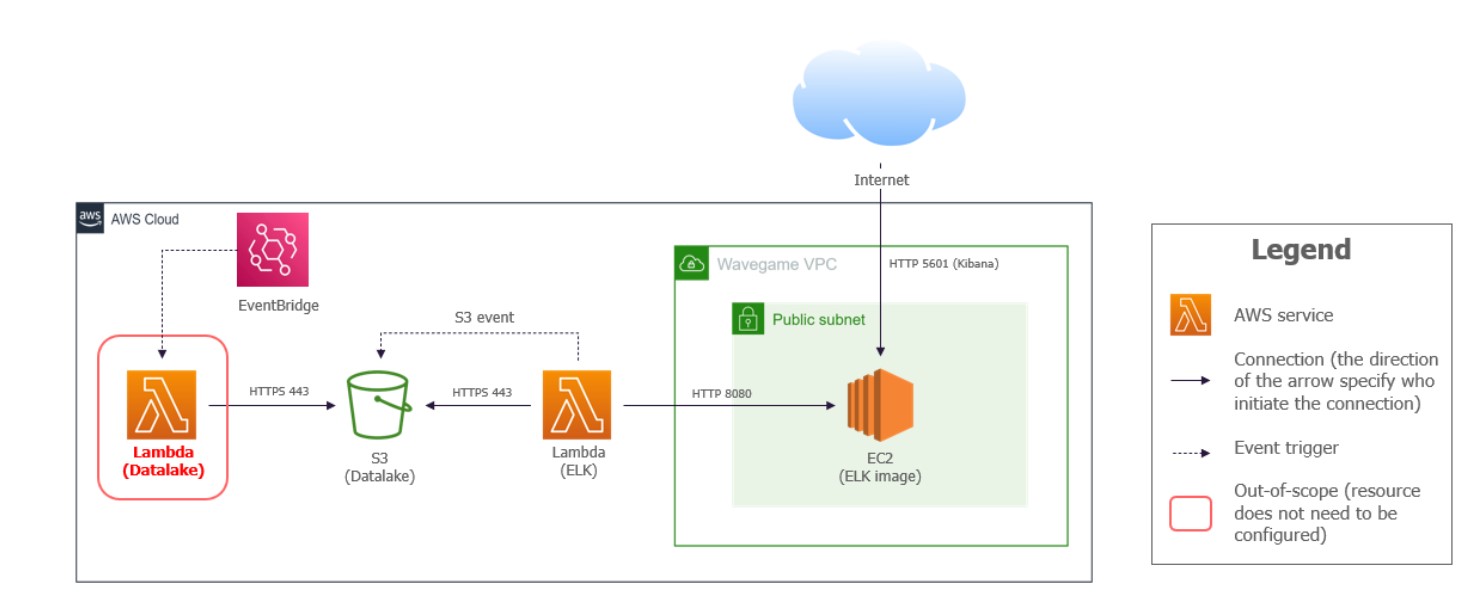

Considering the project constraints, we sketched the “vulnerable” architecture shown in Figure 1. The industrial equipment is simulated by a Lambda (Datalake) which generates logs and sends them to an S3 bucket (Datalake). A second Lambda (ELK) is then triggered by an S3 notification: it retrieves the log file and sends it to the ElasticSearch database (a Docker image running on an EC2 instance). Finally, the Kibana interface is accessible from the Internet for visualization and log analysis.

Figure 1: initial architecture diagram of the Wavegame 2023
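The ELK Lambda’s job can be sketched as two pure functions: one extracts the object location from the S3 notification, the other turns the log file into a request body for Elasticsearch’s `_bulk` endpoint. This is a minimal sketch, assuming the generated logs are JSON Lines; the index name `equipment-logs` is hypothetical, and the download (`s3.get_object`) and HTTP call are left out.

```python
import json
from typing import List, Tuple
from urllib.parse import unquote_plus


def objects_from_s3_event(event: dict) -> List[Tuple[str, str]]:
    """Return the (bucket, key) pairs referenced by an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys are URL-encoded in notifications (a space becomes '+').
        pairs.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return pairs


def to_bulk_body(log_lines: List[str], index: str) -> str:
    """Build an NDJSON body for the Elasticsearch _bulk API.

    Each document is preceded by an action line telling Elasticsearch
    which index to write it to.
    """
    parts = []
    for line in log_lines:
        if not line.strip():
            continue  # ignore blank lines in the log file
        parts.append(json.dumps({"index": {"_index": index}}))
        parts.append(json.dumps(json.loads(line)))  # fails fast on non-JSON lines
    # The _bulk API expects the body to end with a newline.
    return "\n".join(parts) + "\n"
```

In a handler, the first function tells the Lambda which object to download, and the second produces the payload it would POST to `/_bulk` with the `Content-Type: application/x-ndjson` header.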

Step 2: picture a secure architecture based on Public Cloud policies

Now that we have our initial infrastructure, the next step is to design Public Cloud policies that define the security requirements and provide evaluation criteria. To do so, we adapted security best practices implemented by our clients. Here are some examples:

- AWS-01: All AWS resources must use IAM roles that are specific to their needs and that respect the principle of least privilege.

- AWS-02: All AWS resources must be connected and/or attached to a VPC.

- AWS-03: EC2 instances must not be publicly accessible from the Internet.

- AWS-04: All infrastructure logs (AWS Lambda and EC2) generated by AWS services must be sent to CloudWatch.

- AWS-05: Root EBS volume must be encrypted on all EC2 instances.

- AWS-06: Data stored on S3 buckets must be encrypted.
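Policies phrased this way lend themselves to automated checks. As a minimal sketch, here is a policy-as-code check for AWS-03, AWS-05 and AWS-06 over a simplified, hypothetical resource inventory; the `Ec2Instance`, `S3Bucket` and `Inventory` types are ours, not an AWS API, and a real check would populate them from the AWS APIs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Ec2Instance:
    name: str
    public_ip: Optional[str]  # None when the instance has no public address
    root_volume_encrypted: bool


@dataclass
class S3Bucket:
    name: str
    default_encryption: bool


@dataclass
class Inventory:
    instances: List[Ec2Instance] = field(default_factory=list)
    buckets: List[S3Bucket] = field(default_factory=list)


def check_policies(inv: Inventory) -> Dict[str, List[str]]:
    """Map each checked policy ID to the names of non-compliant resources."""
    findings: Dict[str, List[str]] = {"AWS-03": [], "AWS-05": [], "AWS-06": []}
    for inst in inv.instances:
        # Simplification: a public IP is treated as "publicly accessible",
        # ignoring route tables and security groups.
        if inst.public_ip is not None:
            findings["AWS-03"].append(inst.name)
        if not inst.root_volume_encrypted:
            findings["AWS-05"].append(inst.name)
    for bucket in inv.buckets:
        if not bucket.default_encryption:
            findings["AWS-06"].append(bucket.name)
    return findings
```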

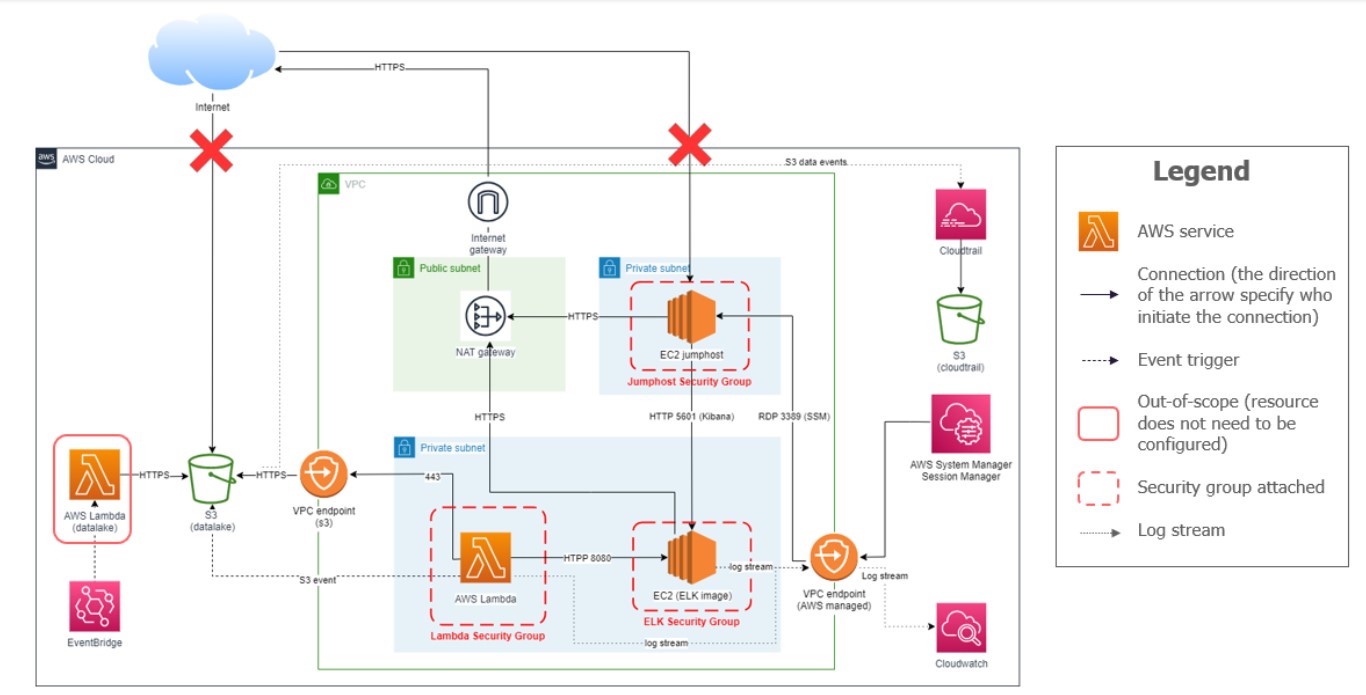

Based on these Public Cloud policies, we came up with the secure architecture shown in Figure 2.

Figure 2: target architecture diagram of the Wavegame 2023

To summarize the network design: all compute resources are placed in a private subnet, the S3 bucket is reachable through a VPC endpoint, and the ELK monitoring platform is accessible only through a jump host Virtual Machine (VM). CloudWatch and CloudTrail are enabled for monitoring and supervision purposes. Finally, security groups are attached to resources to allow only strictly necessary inbound traffic.
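The security group logic can be illustrated with a simplified model in which inbound traffic is denied unless a rule admits it, either by source CIDR or by source security group. This is a sketch under assumptions: protocols and port ranges are ignored, the `sg-jump` ID and the admin CIDR are hypothetical, and how the jump host itself is reached is not specified in the design above.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence


@dataclass(frozen=True)
class IngressRule:
    port: int
    source_cidr: Optional[str] = None  # e.g. "198.51.100.0/24"
    source_sg: Optional[str] = None    # ID of a source security group


def is_allowed(
    rules: Iterable[IngressRule],
    port: int,
    src_ip: Optional[str] = None,
    src_sgs: Sequence[str] = (),
) -> bool:
    """Return True if at least one ingress rule admits the source on `port`.

    As with security groups, rules only allow traffic: anything unmatched is denied.
    """
    for rule in rules:
        if rule.port != port:
            continue
        if rule.source_sg is not None and rule.source_sg in src_sgs:
            return True
        if rule.source_cidr is not None and src_ip is not None:
            if ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.source_cidr):
                return True
    return False


# Hypothetical rules mirroring the target design: SSH to the jump host from an
# admin range only, and Kibana (default port 5601) only from the jump host.
JUMP_HOST_RULES = [IngressRule(port=22, source_cidr="198.51.100.0/24")]
ELK_RULES = [IngressRule(port=5601, source_sg="sg-jump")]
```

With these rules, `is_allowed(ELK_RULES, 5601, src_sgs=("sg-jump",))` is true, while the same port requested from an arbitrary Internet address is refused.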

Step 3: move from design to code in Terraform

To build the coding game, we created and maintained 2 distinct architectures, represented by 2 distinct branches in GitHub. The first is the vulnerable architecture, deployed initially; the second is the solution, which acts as a warranty of feasibility. This “warranty of feasibility” means that 3 mandatory points are met:

- The IAM permissions granted are sufficient for the system to work properly.

- The final configuration of the infrastructure takes into account object lifecycles and their interactions.

- The expertise required to complete the coding game matches the skills students can develop over a 2-week challenge period.

Regarding the development lifecycle, rather than following a linear approach, where we would first write the code for the initial infrastructure and then for the target infrastructure, we opted for an agile approach based on functional blocks. For example, the block “Lambda (ELK) -> S3” aims to design a Lambda that reads from an S3 bucket as soon as an S3 PutObject notification is received, with or without a VPC endpoint. Although we had to maintain 2 Terraform configurations simultaneously, this approach gave us greater agility in reassessing our technical choices. To further reduce redundancy and ensure maintainability, we focused on developing reusable Terraform modules, for instance for Lambda and S3.

To automate the deployment of resources within our sandbox and student accounts, we created a simple CI/CD pipeline in GitHub. It consists of 2 GitHub Actions workflows: one runs terraform apply, the other terraform destroy. Written in YAML, GitHub Actions workflows can call ready-made actions provided by AWS or HashiCorp.

One advantage of such a workflow is that AWS access credentials can be stored in GitHub Secrets instead of the source code or a local file. Moreover, storing Terraform states in an S3 bucket facilitates collaboration. A Terraform state is a file that keeps track of the current configuration of the deployed resources. Each developer sets their own S3 key in their GitHub branch, which becomes the reference for their state.
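Instead of each developer setting the key by hand, the per-branch key could also be derived automatically. The sketch below is a hypothetical alternative, not the setup we used: it builds a state key from the branch name and renders a partial configuration for Terraform’s S3 backend; the `wavegame` prefix and the bucket name passed in are placeholders.

```python
import re


def state_key(branch: str, prefix: str = "wavegame") -> str:
    """Derive a per-branch S3 object key for the Terraform state."""
    # Collapse characters such as '/' (as in 'feature/lambda-elk') into '-'
    # so that each branch maps to a single, readable path segment.
    slug = re.sub(r"[^A-Za-z0-9._-]+", "-", branch).strip("-").lower()
    if not slug:
        raise ValueError(f"cannot derive a state key from branch {branch!r}")
    return f"{prefix}/{slug}/terraform.tfstate"


def backend_config(branch: str, bucket: str, region: str = "us-east-1") -> str:
    """Render a partial S3 backend configuration file for `terraform init`."""
    return (
        f'bucket = "{bucket}"\n'
        f'key    = "{state_key(branch)}"\n'
        f'region = "{region}"\n'
    )
```

A workflow step could write this output to `backend.hcl` and run `terraform init -backend-config=backend.hcl` against an empty `backend "s3" {}` block, taking the branch name from the `GITHUB_REF_NAME` environment variable that GitHub Actions sets.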

As development progressed, we realized how wide the gap was between the initial and the target architecture, mainly because their IAM and network logic differ greatly. As a result, it became essential to carry out tests under real conditions, i.e. from the AWS console, to identify breaking changes and blocking policies, and to assess complexity. As an example, one of the tests reminded us that the startup script of an EC2 instance, called user-data, runs only at first boot by default and is not re-executed after a reboot. This behavior prevented the implementation of the EBS root volume encryption policy (AWS-05).

Step 4: securely deploy the environments

In the context of the challenge, we were about to grant students privileged access to an environment for two weeks, during which constant individual monitoring or assistance would not be feasible. While this approach offers a better learning opportunity, it raises specific security scenarios that we had to anticipate and mitigate. Among these, budget overrun was a major concern, given the unrestricted access and resources at the students’ disposal. Another significant threat was the potential for students to escalate privileges within the organization, gaining access to other systems or interfering with each other’s environments. Lastly, the risk of resources being misappropriated for unauthorized or malicious purposes was not negligible. Each of these threats required careful consideration to ensure a secure and responsible deployment.

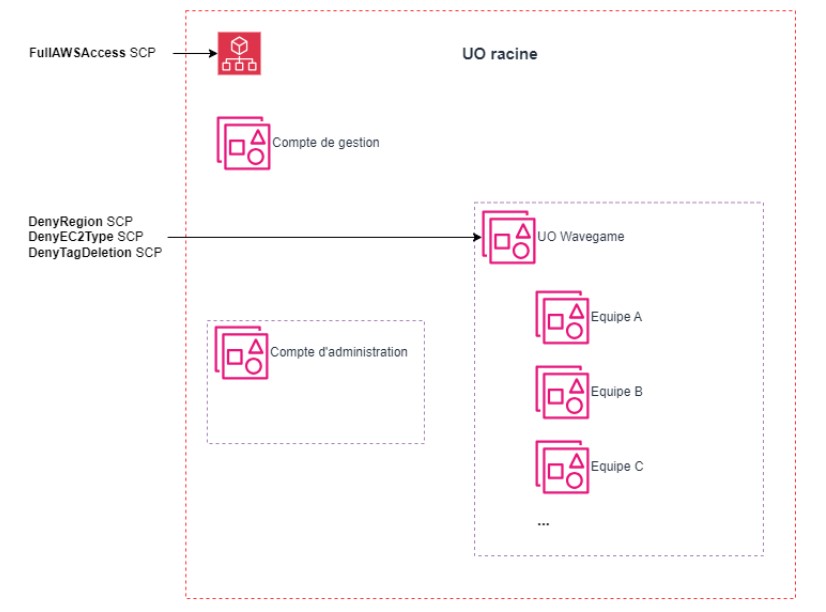

For this coding game, we opted for a multi-account AWS design to isolate each team’s environment. With AWS Organizations, we achieved simplified administration and improved cost control, and were able to enforce guardrails using Service Control Policies (SCPs). Figure 3 presents our organization, made up of an admin account and a Wavegame OU that hosts the team accounts where the infrastructure is deployed. We implemented 3 specific SCPs to:

- Block the use of AWS services outside the designated region, us-east-1, by defining a list of authorized operations.

- Enforce the use of Amazon EC2 instance types t2.micro or t2.large, a constraint related to the ElasticSearch (ELK) environment.

- Prevent student accounts from deleting or modifying any resource that has the “protected” tag.
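The three guardrails can be sketched as SCP documents. This is an illustration under assumptions rather than the policies actually deployed: the `NotAction` allow-list, the admin role name `WavegameAdmin` and the protected Lambda actions are hypothetical, while `aws:RequestedRegion`, `ec2:InstanceType`, `aws:ResourceTag/...` and `aws:PrincipalArn` are standard IAM condition keys (which actions support `aws:ResourceTag` varies by service). Python dictionaries let the policies be generated and checked before being attached.

```python
import json

# Hypothetical ARN pattern of the organizers' admin role, exempted from the tag guardrail.
ADMIN_ROLE_ARN = "arn:aws:iam::*:role/WavegameAdmin"


def region_scp(region: str = "us-east-1") -> dict:
    """Deny requests outside `region`, except for an allow-list of global services."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyOutsideRegion",
            "Effect": "Deny",
            # Illustrative allow-list: these global services escape the region check.
            "NotAction": ["iam:*", "organizations:*", "sts:*", "support:*"],
            "Resource": "*",
            "Condition": {"StringNotEquals": {"aws:RequestedRegion": [region]}},
        }],
    }


def instance_type_scp(allowed=("t2.micro", "t2.large")) -> dict:
    """Deny launching EC2 instances whose type is not in `allowed`."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "RestrictInstanceTypes",
            "Effect": "Deny",
            "Action": "ec2:RunInstances",
            "Resource": "arn:aws:ec2:*:*:instance/*",
            "Condition": {"StringNotEquals": {"ec2:InstanceType": list(allowed)}},
        }],
    }


def protected_tag_scp(tag_key: str = "protected") -> dict:
    """Deny changes to resources carrying `tag_key`, except for the admin role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "ProtectTaggedResources",
            "Effect": "Deny",
            # Illustrative subset covering Lambda functions; untagging is denied
            # too, otherwise the protection could simply be removed.
            "Action": [
                "lambda:DeleteFunction",
                "lambda:UpdateFunctionCode",
                "lambda:UpdateFunctionConfiguration",
                "lambda:UntagResource",
            ],
            "Resource": "*",
            "Condition": {
                # "Null": "false" means the tag key is present on the resource.
                "Null": {f"aws:ResourceTag/{tag_key}": "false"},
                "ArnNotLike": {"aws:PrincipalArn": ADMIN_ROLE_ARN},
            },
        }],
    }


if __name__ == "__main__":
    for build in (region_scp, instance_type_scp, protected_tag_scp):
        print(json.dumps(build(), indent=2))
```

SCPs only restrict permissions and never grant any, so the students’ administrator permissions still apply outside these Deny statements.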

Additionally, to better manage costs, especially for a two-week duration, we set up a Lambda function to automatically shut down EC2 instances after two hours of activity. To prevent any unauthorized alterations by the students, this Lambda function was one of the resources secured with the “protected” tag.

Figure 3: AWS Organization of the Wavegame 2023
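The automatic shutdown Lambda can be sketched as follows. This is a minimal sketch, assuming the function is triggered periodically (for instance by an EventBridge schedule) and that an instance’s `LaunchTime`, which reflects its most recent start, is a good enough proxy for “two hours of activity”. The selection logic is kept apart from the boto3 calls so it can be tested offline.

```python
from datetime import datetime, timedelta, timezone
from typing import List

MAX_RUNTIME = timedelta(hours=2)


def instances_to_stop(reservations: list, now: datetime,
                      max_runtime: timedelta = MAX_RUNTIME) -> List[str]:
    """Return IDs of running instances started at least `max_runtime` ago.

    `reservations` has the shape of the "Reservations" list returned by
    EC2 DescribeInstances; LaunchTime must be a timezone-aware datetime.
    """
    ids = []
    for reservation in reservations:
        for inst in reservation["Instances"]:
            if inst["State"]["Name"] != "running":
                continue
            if now - inst["LaunchTime"] >= max_runtime:
                ids.append(inst["InstanceId"])
    return ids


def lambda_handler(event, context):
    import boto3  # imported here so the selection logic runs without the AWS SDK

    ec2 = boto3.client("ec2")
    reservations = []
    paginator = ec2.get_paginator("describe_instances")
    for page in paginator.paginate(
        Filters=[{"Name": "instance-state-name", "Values": ["running"]}]
    ):
        reservations.extend(page["Reservations"])
    ids = instances_to_stop(reservations, datetime.now(timezone.utc))
    if ids:
        ec2.stop_instances(InstanceIds=ids)
    return {"stopped": ids}
```

Stopping rather than terminating keeps the EBS volumes, and therefore the students’ work, intact.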

Finally, in addition to our own IAM user administering the Wavegame OU through an assumable IAM role, we created in each AWS account an IAM user with the AdministratorAccess policy to give students autonomy during the challenge, in particular enough rights to create nominative accounts.

Step 5: prepare for the Run and the correction

Once the challenge kicked off, the students had two weeks to secure the resources in their AWS account following the guidelines. With such extensive permissions, major configuration errors can arise quickly. For instance, one group created a “Deny All” S3 bucket policy that locked out not only themselves but everyone else, since none of us had root user privileges on the account.
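One way to reduce that risk would be to flag lockout-prone bucket policies before applying them. This is a hypothetical check, not part of our setup: it reports Deny statements that target every principal and every S3 action without any condition. Such a statement also blocks the s3:PutBucketPolicy and s3:DeleteBucketPolicy calls needed to undo the mistake, leaving the account’s root user as the only way back.

```python
from typing import List


def _as_list(value) -> list:
    """IAM policy fields may hold a single value or a list of values."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def lockout_statements(policy: dict) -> List[dict]:
    """Return unconditional Deny statements covering all principals and all S3 actions."""
    risky = []
    for stmt in _as_list(policy.get("Statement")):
        principal = stmt.get("Principal")
        everyone = principal == "*" or principal == {"AWS": "*"}
        all_actions = any(a in ("*", "s3:*") for a in _as_list(stmt.get("Action")))
        if (stmt.get("Effect") == "Deny" and everyone and all_actions
                and "Condition" not in stmt):
            risky.append(stmt)
    return risky
```

A Deny scoped by a condition, for instance on `aws:SecureTransport` to reject non-TLS requests, is not flagged.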

To address such situations, we set up the following communication system: each team was assigned a coach responsible for reporting technical issues to the organizing team, which then escalated incidents to us. This allowed us to investigate and fix issues, or to communicate quickly with the teams, without receiving too many messages about simple questions that the coaches could answer.

Besides incident management, our role also involved monitoring budget overruns. To achieve this, we set up cost alerts for each AWS account. We also developed a script to track each team’s spending in real time. This tool proved very useful both to estimate the time the students spent on the challenge and to respond to abnormal spending. For example, two days after the challenge began, an alert was triggered by a failure of the Lambda function meant to shut down the VM.
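A budget-tracking script of this kind could be built on the Cost Explorer API. The sketch below is an assumption about how it might work, not the script we used: it queries daily unblended cost per linked account (which requires running from the organization’s management account), then flags accounts that exceed a budget of your choosing or whose latest daily cost spikes above their average; the 3x spike factor is arbitrary.

```python
from collections import defaultdict
from typing import Dict, List


def fetch_costs(start: str, end: str) -> dict:
    """Query daily cost per linked account (dates as 'YYYY-MM-DD', end exclusive)."""
    import boto3  # imported here so the analysis below runs without the AWS SDK

    ce = boto3.client("ce")
    # NextPageToken pagination is omitted for brevity.
    return ce.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="DAILY",
        Metrics=["UnblendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "LINKED_ACCOUNT"}],
    )


def spend_by_account(response: dict) -> Dict[str, List[float]]:
    """Turn a GetCostAndUsage response into a daily cost series per account.

    Accounts with no spend on a given day may be absent from that day's
    groups, so series can have different lengths.
    """
    series: Dict[str, List[float]] = defaultdict(list)
    for day in response["ResultsByTime"]:
        for group in day["Groups"]:
            amount = float(group["Metrics"]["UnblendedCost"]["Amount"])
            series[group["Keys"][0]].append(amount)
    return dict(series)


def flag_accounts(series: Dict[str, List[float]], budget: float,
                  spike_factor: float = 3.0) -> Dict[str, List[str]]:
    """Return the reasons for alerting, keyed by account ID."""
    alerts: Dict[str, List[str]] = {}
    for account, costs in series.items():
        reasons = []
        total = sum(costs)
        if total > budget:
            reasons.append(f"total {total:.2f} exceeds budget {budget:.2f}")
        if len(costs) >= 2:
            baseline = sum(costs[:-1]) / (len(costs) - 1)
            if baseline > 0 and costs[-1] > spike_factor * baseline:
                reasons.append(
                    f"last day {costs[-1]:.2f} is over {spike_factor}x the average {baseline:.2f}"
                )
        if reasons:
            alerts[account] = reasons
    return alerts
```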

Once the challenge had concluded, with few incidents, and student access had been revoked, it was time to proceed with the assessment and declare the winners. As a reminder, the students were required to configure their AWS account in compliance with the Public Cloud policies. For their evaluation, we used two grading mechanisms:

- An automatic assessment, by deploying AWS Config managed rules at the end of the challenge. AWS provides a set of managed rules that covers a significant share of the requirements. For example, one rule checks whether S3 buckets have server-side encryption enabled (AWS-06).

- A manual assessment based on clearly documented expected criteria and steps for verification.
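The two mechanisms could be combined into a single score per team. The sketch below is hypothetical: the mapping from policies to AWS Config managed rule identifiers is illustrative and only approximates each policy, policies without a suitable managed rule (such as AWS-01 on least privilege) are graded manually, and every policy carries the same weight. Compliance values follow AWS Config’s `COMPLIANT` / `NON_COMPLIANT` vocabulary, and a rule with no result counts as a failure.

```python
from typing import Dict, List

# Illustrative mapping from policy IDs to AWS Config managed rule identifiers.
# ENCRYPTED_VOLUMES checks all attached volumes, a superset of AWS-05.
CONFIG_RULES: Dict[str, List[str]] = {
    "AWS-02": ["LAMBDA_INSIDE_VPC"],
    "AWS-03": ["EC2_INSTANCE_NO_PUBLIC_IP"],
    "AWS-05": ["ENCRYPTED_VOLUMES"],
    "AWS-06": ["S3_BUCKET_SERVER_SIDE_ENCRYPTION_ENABLED"],
}


def score_team(config_results: Dict[str, str], manual_checks: Dict[str, bool]) -> float:
    """Return the share of policies passed, between 0.0 and 1.0.

    config_results maps rule identifiers to a compliance type such as
    "COMPLIANT" or "NON_COMPLIANT"; manual_checks maps the manually graded
    policy IDs to pass (True) or fail (False).
    """
    passed = 0
    for rules in CONFIG_RULES.values():
        # A policy passes only if every rule mapped to it is compliant;
        # a missing result counts as a failure.
        if all(config_results.get(rule) == "COMPLIANT" for rule in rules):
            passed += 1
    passed += sum(1 for ok in manual_checks.values() if ok)
    total = len(CONFIG_RULES) + len(manual_checks)
    return passed / total if total else 0.0
```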

To conclude, the organization of a coding game is an ambitious project, requiring strong Cloud and Terraform skills, solid management capabilities and the ability to react to unexpected events. Despite the challenges, this is an outstanding learning opportunity. Indeed, for the participants, the Wavegame provides an immersive entrance into Public Cloud Security. Meanwhile, for the organizers, the Wavegame offers a new practical experience in designing, building, and maintaining operational readiness for an infrastructure in the Public Cloud.