In the evolving landscape of AI governance and regulation, recent efforts have shifted from scattered and reactive measures to cohesive policy frameworks that foster innovation while safeguarding against potential misuse.

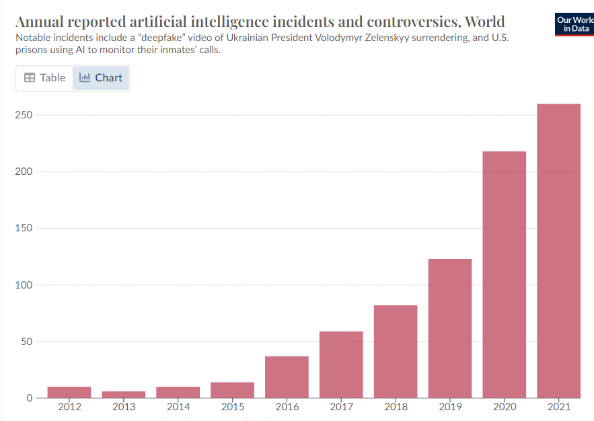

As AI becomes more integrated into daily life, both the public and private sectors have raised ethical concerns about privacy, bias, accountability, and transparency.

Today, as governments actively craft AI guidance and legislation, policymakers face the challenge of balancing the need to foster innovation with the need to ensure accountability. A regulatory framework that prioritizes innovation but relies too heavily on the private sector’s self-governance could lead to a lack of oversight and accountability. Conversely, while robust safeguards are essential to mitigate potential risks, an overly restrictive approach may stifle technological progress.

This whitepaper will explore the approaches proposed by the governments of the United States and the United Kingdom as they pertain to AI governance across both the public and private sectors.

The American Approach to AI Regulation

In October 2023, the White House published Executive Order 14110 on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (the AI Executive Order). The order sets out several near-term priorities: introducing reporting requirements for AI developers that exceed specified computing thresholds, launching research initiatives, developing frameworks for responsible AI use, and establishing AI governance within the federal government. Longer-term efforts focus on international cooperation, global standards, and AI safety.

To ensure accountability, the order directs the Secretary of Commerce to enforce reporting provisions for companies developing dual-use AI foundation models, organizations acquiring large-scale computing clusters, and Infrastructure-as-a-Service (IaaS) providers that enable foreign entities to conduct certain AI model training. While these criteria will likely exempt most small and medium-sized AI companies from immediate regulation, large industry players such as OpenAI, Anthropic, and Meta could be affected if they surpass the computing threshold established by the order (set on an interim basis at 10^26 integer or floating-point operations of training compute).

To foster innovation, further sections of the order reaffirm the US government’s aim to promote AI innovation and competition: supporting R&D initiatives and public-private partnerships, streamlining visa processes to attract AI talent to the US, prioritizing AI-oriented recruitment within the federal government, clarifying IP issues related to AI, and preventing unlawful collusion.

Overall, the documents published by the US are mostly non-binding, indicating a strategy of encouraging the private sector to self-regulate and align with common AI best practices. In this approach, the White House has been persistent in its messaging that it is committed to nurturing innovation, research, and leadership in the domain, while balancing this against the need for a secure and responsible AI ecosystem.

The British Approach to AI Regulation

The Bletchley Declaration, agreed upon during the AI Safety Summit 2023 held at Bletchley Park, Buckinghamshire, marks a pioneering international effort towards ensuring the safe and responsible development of AI technologies. The declaration represents a commitment from 29 governments to collaborate on developing AI in a manner that is human-centric, trustworthy, and responsible, with the UK, the US, China, and major European Union member states among the notable signatories. The focus is on “frontier AI,” which refers to highly capable, general-purpose AI models that could pose significant risks, particularly in areas such as cybersecurity and biotechnology.

The declaration emphasizes the need for governments to take proactive measures to ensure the safe development of AI, acknowledging the technology’s pervasive deployment across many facets of daily life, including housing, employment, education, and healthcare. It calls for risk-based policies, appropriate evaluation metrics, tools for safety testing, and the building of relevant public sector capability and scientific research.

In addition to the declaration, a policy paper on AI ‘Safety Testing’ was signed by ten countries, including the UK and the US, as well as major technology companies. The paper outlines a broad framework under which government agencies test next-generation AI models, promotes international cooperation, and enables government agencies to develop their own approaches to AI safety regulation.

The key takeaway from the Bletchley Declaration is a clear signal from governments of the urgency of ensuring that AI is developed safely. However, how these commitments will translate into specific policy proposals, and what role the newly announced AI Safety Institute (AISI) will play in the UK’s regulatory landscape, remain to be seen. The AISI’s mission is to minimize surprise from rapid and unexpected advances in AI, focusing on testing and evaluation of advanced AI systems, foundational AI safety research, and facilitating information exchange.

As they seek to establish themselves as AI leaders in the global community and set the direction for effective policymaking, both the US and the UK are navigating the balance between promoting AI innovation and ensuring ethical governance. While most of the current focus is on proposing guidelines and frameworks for the safe and responsible use of AI, the references to potential future regulation in both documents should serve as a wake-up call for companies to start aligning their practices with the principles and recommendations outlined.

To stay ahead of the curve, organizations should develop robust methodologies to monitor AI risks effectively. This involves adapting their AI strategy to prioritize risk mitigation, identifying potential harms that may arise from the deployment of AI systems, and preparing for forthcoming regulatory measures by implementing a secure and comprehensive risk management program.

However, not every jurisdiction follows the pragmatic, largely non-binding approach of the US and the UK. China has chosen a targeted, incremental approach, adopting rules on generative AI that came into effect in 2023. In Europe, the AI Act shows that the EU does not intend to let AI technologies get out of hand.