On March 13, 2024, the European Parliament adopted the final version of the European Artificial Intelligence Act, also known as the “AI Act”[1]. Nearly three years after the first version of the text was published, the twenty-seven countries of the European Union reached a historic agreement on the world’s first harmonized rules on artificial intelligence. The final version of the text is expected on April 22, 2024, prior to publication in the Official Journal of the European Union.

The AI Act aims to ensure that artificial intelligence systems and models marketed within the European Union are used ethically, safely, and in compliance with EU fundamental rights. The Act has also been drafted to strengthen the competitiveness and innovation of AI companies. By reducing the risk of abuses, it is intended to reinforce user confidence in AI and encourage its adoption.

France Digitale, Europe’s largest startup association; Gide, an international French business law firm; and Wavestone have joined forces to co-author a white paper to help you understand and apply the European AI Act, titled “AI Act: Keys to Understanding and Implementing the European Law on Artificial Intelligence”.

In this publication, France Digitale, Gide, and Wavestone share their vision of the AI Act, from the types of systems affected to the major stages of compliance.

A few definitions to get you started

The AI Act makes a distinction between artificial intelligence systems and models, which it defines as follows:

- An Artificial Intelligence System (AIS) is an automated system that is designed to operate at different levels of autonomy and that can generate predictions, recommendations, or decisions that influence physical or virtual environments.

- A General-Purpose AI system (GPAI) is a versatile AI system capable of performing a wide range of distinct tasks. It can be integrated into a variety of systems or applications, demonstrating great flexibility and adaptability.

Players concerned

The AI Act concerns all suppliers, distributors, and deployers of AI systems and models, including legal entities (companies, foundations, associations, research laboratories, etc.), that market their AI system or model within the European Union, whether they are headquartered inside or outside the European Union.

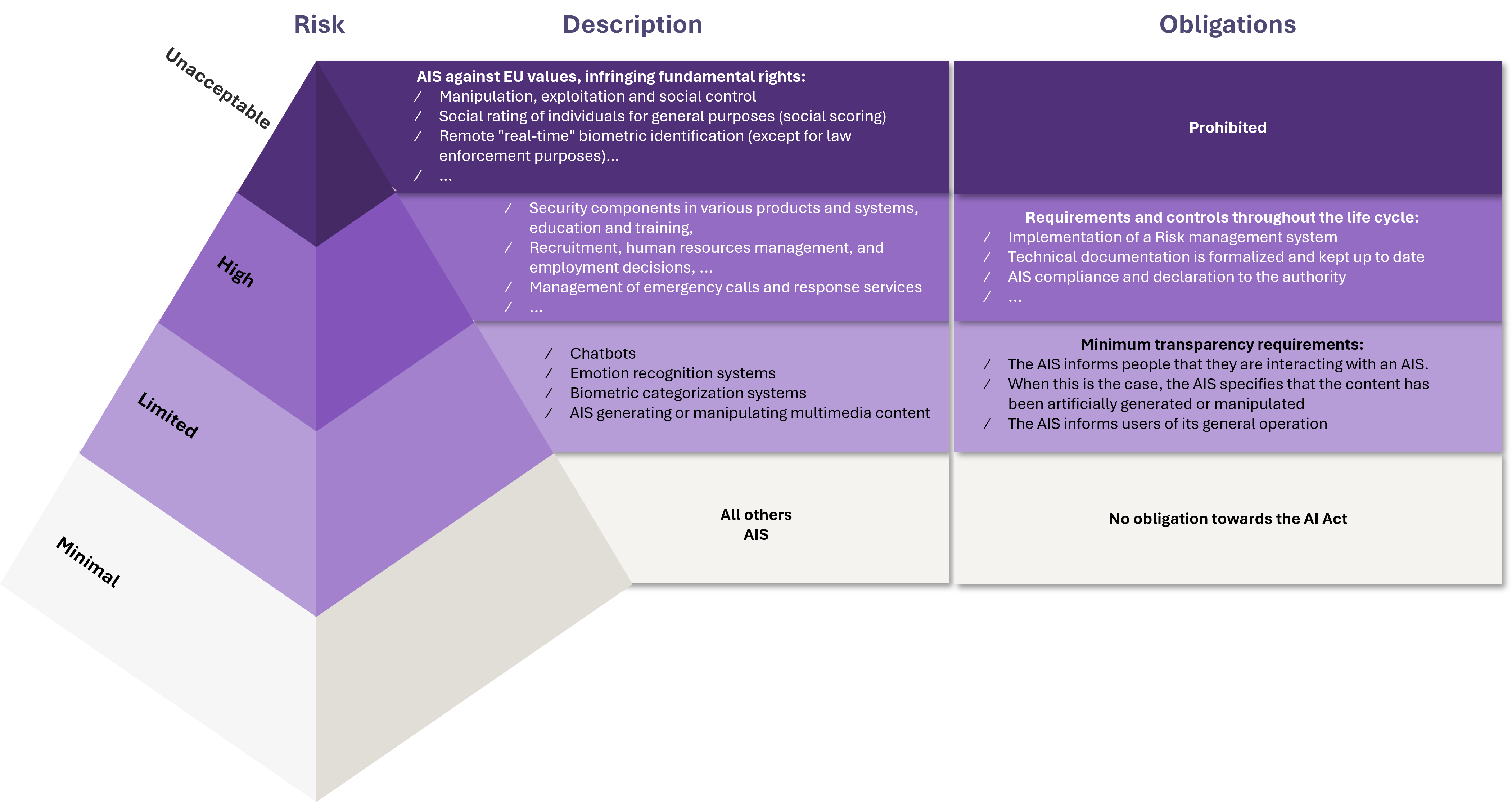

The level of regulation and associated obligations depend on the level of risk presented by the AI system or model.

Classification of AIS According to Risk Level

The AI Act introduces a classification of artificial intelligence systems. AIS must be analyzed and prioritized according to the risk they present to users: minimal, low, high, or unacceptable. The higher the level of risk, the more obligations apply.

Unacceptable-risk AIS are prohibited by the AI Act, while minimal-risk AIS are not subject to the Act. High-risk and low-risk AIS are therefore the focus of most of the measures set out in the regulation.
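
For teams that keep an inventory of their AI systems, the four levels can be recorded as a simple lookup. The following is a minimal Python sketch, not a legal tool: the names `RiskLevel`, `TREATMENT`, `treatment_for`, and `may_be_marketed` are hypothetical, and the treatment labels only paraphrase the summary above. Assigning a level to a given system remains a legal analysis that this sketch does not attempt.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk levels named in the classification above."""
    MINIMAL = "minimal"
    LOW = "low"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Hypothetical labels that paraphrase the text; they are not wording from the Act.
TREATMENT = {
    RiskLevel.UNACCEPTABLE: "prohibited",
    RiskLevel.HIGH: "subject to obligations",
    RiskLevel.LOW: "subject to obligations",
    RiskLevel.MINIMAL: "not subject to the Act",
}


def treatment_for(level: RiskLevel) -> str:
    """Return the summary treatment recorded for a risk level."""
    return TREATMENT[level]


def may_be_marketed(level: RiskLevel) -> bool:
    """Only unacceptable-risk systems are prohibited outright."""
    return level is not RiskLevel.UNACCEPTABLE


if __name__ == "__main__":
    for level in RiskLevel:
        print(f"{level.value}: {treatment_for(level)}")
```

A lookup table keeps the mapping easy to review and update as the detailed obligations for high-risk and low-risk systems are clarified.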

Specific obligations apply to generative AI and to the development of general-purpose AI models (e.g., Large Language Models or “LLMs”), depending on various factors: computing power, number of users, use of an open-source model, etc.

To meet the new challenges posed by the emergence of generative artificial intelligence, the AI Act includes specific cybersecurity measures aimed at reducing the risks created by its development.

In a future publication, we’ll be taking a closer look at the cybersecurity aspects of the AI Act. In the meantime, you can find our latest publications on AI and cybersecurity: “Securing AI: The New Cybersecurity Challenges”, “The industrialization of AI by cybercriminals: should we really be worried?”, “Language as a sword: the risk of prompt injection on AI Generative”.

[1] France agrees to ratify the EU Artificial Intelligence Act after seven months of resistance (lemonde.fr).