The dawn of generative Artificial Intelligence (GenAI) in the corporate sphere signals a turning point in the digital narrative. It is exemplified by pioneering tools like OpenAI’s ChatGPT (which found its way into Bing as “Bing Chat, leveraging the GPT-4 language model) and Microsoft 365’s Copilot. These technologies have graduated from being mere experimental subjects or media fodder. Today, they lie at the heart of businesses, redefining workflows and outlining the future trajectory of entire industries.

While there have been significant advancements, there are also challenges. For instance, Samsung’s sensitive data was exposed on ChatGPT by employees (the entire source code of a database download program)[1]. Compounding these challenges, ChatGPT [OpenAI] itself underwent a security breach that affected over 100 000 users between June 2022 and May 2023, with those compromised credentials now being traded on the Dark web[2].

At this digital crossroad, it’s no wonder that there’s both enthusiasm and caution about embracing the potential of generative AI. Given these complexities, it’s understandable why many grapple with determining the optimal approach to AI. With that in mind, the article aims to address the most representative questions asked by our clients.

Question 1: Is Generative AI just a buzz?

AI is a collection of theories and techniques implemented with the aim of creating machines capable of simulating the cognitive functions of human intelligence (vision, writing, moving…). A particularly captivating subfield of AI is “Generative AI”. This can be defined as a discipline that employs advanced algorithms, including artificial neural networks, to autonomously craft content, whether it’s text, images, or music. Moving on from your basic banking chatbot answering aside all your question, GenAI not only just mimics capabilities in a remarkable way, but in some cases, enhances them.

Our observation on the market: the reach of generative AI is broad and profound. It contributes to diverse areas such as content creation, data analysis, decision-making, customer support and even cybersecurity (for example, by identifying abnormal data patterns to counter threats). We’ve observed 3 fields where GenAI is particularly useful.

Marketing and customer experience personalisation

GenAI offers insights into customer behaviours and preferences. By analysing data patterns, it allows businesses to craft tailored messages and visuals, enhancing engagement, and ensuring personalized interactions.

No-code solutions and enhanced customer support

In today’s rapidly changing digital world, the ideas of no-code solutions and improved customer service are increasingly at the forefront. Bouygues Telecom is a good example of a leveraging advanced tools. They are actively analysing voice interactions from recorded conversations between advisors and customers, aiming to improve customer relationships[3]. On a similar note, Tesla employs the AI tool “Air AI” for seamless customer interaction, handling sales calls with potential customers, even going so far as to schedule test drives.

As for coding, an interesting experiment from one of our clients stands out. Involving 50 developers, the test found that 25% of the AI-generated code suggestions were accepted, leading to a significant 10% boost in productivity. It is still early to conclude on the actual efficiency of GenAI for coding, but the first results are promising and should be improved. However, the intricate issue of intellectual property rights concerning this AI-generated code continues to be a topic of discussion.

Documentary watch and research tool

Using AI as a research tool can help save hours in domains where regulatory and documentary corpus are very extensive (e.g.: financial sector). At Wavestone, we internally developed two AI tools. The first, CISO GPT, allows users to ask specific security questions in their native language. Once a question is asked, the tool scans through extensive security documentation, efficiently extracting and presenting relevant information. The second one, a Library and credential GPT, provides specific CVs from Wavestone employees, as well as references from previous engagements for the writing of commercial proposals.

However, while tools like ChatGPT (which draws data from public databases) are undeniably beneficial, the game-changing potential emerges when companies tap into their proprietary data. For this, companies need to implement GenAI capabilities internally or setup systems that ensure the protection of their data (cloud-based solution like Azure OpenAI or proprietary models). From our standpoint, GenAI is worth more than just the buzz around it and is here to stay. There are real business applications and true added value, but also security risks. Your company needs to kick-off the dynamic to be able to implement GenAI projects in a secure way.

Question 2: What is the market reaction to the use of ChatGPT?

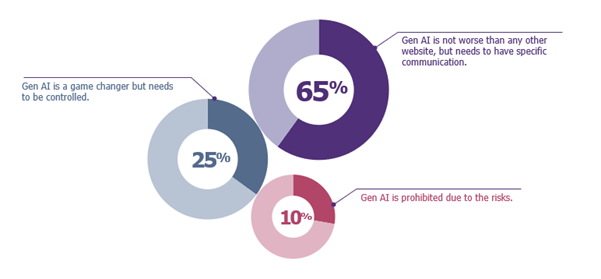

To delve deeper into the perspective of those at the forefront of cybersecurity, we’ve asked our client’s CISO’s, their opinions on the implications and opportunities of GenAI. Therefore, the following graph illustrates the opinions of CISOs on this subject.

Based on our survey, the feedback from the CISOs can be grouped into three distinct categories:

The Pragmatists (65%)

Most of our respondents recognize the potential data leakage risks with ChatGPT, but they equate them to risk encountered on forums or during exchanges on platforms or forums such as Stack Overflow (for developers). They believe that the risk of data leaks hasn’t significantly changed with ChatGPT. However, the current buzz justifies dedicated sensibilization campaigns to emphasize the importance of not using company-specific or sensitive data.

The Visionaries (25%)

A quarter of the respondents view ChatGPT as a ground-breaking tool. They’ve noticed its adoption in departments such as communication and legal. They’ve taken proactive steps to understanding its use (which data, which use cases) and have subsequently established a set of guidelines. This is a more collaborative approach to define a use case framework.

The Sceptics (10%)

A segment of the market has reservations about ChatGPT. To them, it’s a tool that’s too easy to misuse, receives excessive media attention and carries inherent risks, according to various business sectors. Depending on your activity, this can be relevant when judging that the risk of data leakage and loss of intellectual property is too high compared to the potential benefits.

Question 3: What are the risks of Generative AI?

In evaluating the diverse perspectives on generative AI within organizations, we’ve classified the concerns into four distinct categories of risks, presented from the least severe to the most critical:

Content alteration and misrepresentation

Organizations using generative AI must safeguard the integrity of their integrated systems. When AI is maliciously tampered with, it can distort genuine content, leading to misinformation. This can produce biased outputs, undermining the reliability and effectiveness of AI-driven solutions. Specifically, for Large Language Models (LLMs) like GenAI, there’s a notable concern of prompt injections. To mitigate this, organizations should:

- Develop a malicious input classification system that assesses the legitimacy of a user’s input, ensuring that only genuine prompts are processed.

- Limit the size and change the format of user inputs. By adjusting these parameters, the chances of successful prompt injection are significantly reduced.

Deceptive and manipulative threats

Even if an organization decides to prohibit the use of generative AI, it must remain vigilant about the potential surge in phishing, scams and deepfake attacks. While one might argue that these threats have been around in the cybersecurity realm for some time, the introduction of generative AI intensifies both their frequency and sophistication.

This potential is vividly illustrated through a range of compelling examples. For instance, Deutsche Telekom released an awareness video that demonstrates the ability, by using GenAI, to age a young girl’s image from photos/videos available on social media.

Furthermore, HeyGen is a generative AI software capable of dubbing videos into multiple languages while retaining the original voice. It’s now feasible to hear Donald Trump articulating in French or Charles de Gaulle conversing in Portuguese.

These instances highlight the potential for attackers to use these tools to mimic a CEO’s voice, create convincing phishing emails, or produce realistic video deepfakes, intensifying detection and defence challenges.

For more information on the use of GenAI by cybercriminals, consult the dedicated RiskInsight article.

Data confidentiality and privacy concerns

If organizations choose to allow the use of generative AI, they must consider that the vast data processing capabilities of this technology can pose unintended confidentiality and privacy risks. First, while these models excel in generating content, they might leak sensitive training data or replicate copyrighted content.

Furthermore, concerning data privacy rights, if we examine ChatGPT’s privacy policy, the chatbot can gather information such as account details, identification data extracted from your device or browser, and information entered in the chatbot (that can be used to train the generative AI)[4]. According to article 3 (a) of OpenAI’s general terms and conditions, input and output belong to the user. However, since these data are stored and recorded by Open AI, it poses risks related to intellectual property and potential data breaches (as previously noted in the Samsung case). Such risks can have significant reputational and commercial impact on your organization.

Precisely for these reasons, OpenAI developed the ChatGPT Business subscription, which provides enhanced control over organizational data (such as AES-256 encryption for data at rest, TLS 1.2+ for data in transit, SSO SAML authentication, and a dedicated administration console)[5]. But in reality, it’s all about the trust you have in your provider and the respect of contractual commitments. Additionally, there’s the option to develop or train internal AI models using one’s own data for a more tailored solution.

Model vulnerabilities and attacks

As more organizations use machine learning models, it’s crucial to understand that these models aren’t fool proof. They can face threats that affect their reliability, accuracy or confidentiality, as it will be explained in the following section.

Question 4: How can an AI model be attacked?

AI introduces added complexities atop existing network and infrastructure vulnerabilities. It’s crucial to note that these complexities are not specific to generative AI, but they are present in various AI models. Understanding these attack models is essential to reinforcing defences and ensuring the secure deployment of AI. There are three main attack models (non-exhaustive list):

For detailed insights on vulnerabilities in Large Language Models and generative AI, refer to the “OWASP Top 10 for LLM” by the Open Web Application Security Project (OWASP).

Evasion attacks

These attacks target AI by manipulating the inputs of machine learning algorithms to introduce minor disturbances that result in significant alterations to the outputs. Such manipulations can cause the AI model to classify inaccurately or overlook certain inputs. A classic example would be altering signs to deceive AI self-driving cars (have identify a “stop” sign into a “priority” sign). However, evasion attacks can also apply to facial recognition. One might use subtle makeup patterns, strategically placed stickers, special glasses, or specific lighting conditions to confuse the system, leading to misidentification.

Moreover, evasion attacks extend beyond visual manipulation. In voice command systems, attackers can embed malicious commands within regular audio content in such a way that they’re imperceptible to humans but recognizable by voice assistants. For instance, researchers have demonstrated adversarial audio techniques targeting speech recognition systems, like those in voice-activated smart speaker systems such as Amazon’s Alexa. In one scenario, a seemingly ordinary song or commercial could contain a concealed command instructing the voice assistant to make an unauthorized purchase or divulge personal information, all without the user’s awareness[6].

Poisoning

Poisoning is a type of attack in which the attacker altered data or model to modify the ML algorithm’s behaviour in a chosen direction (e.g to sabotage its results, to insert a backdoor). It is as if the attacker conditioned the algorithm according to its motivations. Such attacks are also called causative attacks.

In line with this definition, attackers use causative attacks to guide a machine learning algorithm towards their intended outcome. They introduced malicious samples into the training dataset, leading the algorithm to behave in unpredictable ways. A notorious example is Microsoft’s chatbot, TAY, that was unveiled on Twitter in 2016. Designed to emulate and converse with American teenagers, it soon began acting like a far-right activist[7]. This highlights the fact that, in their early learning stages, AI systems are susceptible to the data they encounter. 4Chan users intentionally poisoned TAY’s data with their controversial humour and conversations.

However, data poisoning can also be unintentional, stemming from biases inherent in the data sources or the unconscious prejudices of those curating the datasets. This became evident when early facial recognition technology had difficulties identifying darker skin tones. This underscores the need for diverse and unbiased training data to guard against both deliberate and inadvertent data distortions.

Finally, the proliferation of open-source AI algorithms online, such as those on platforms like Hugging Face, presents another risk. Malicious actors could modify and poison these algorithms to favour specific biases, leading unsuspecting developers to inadvertently integrate tainted algorithms into their projects, further perpetuating biases or malicious intents.

Oracle attacks

This type of attack involves probing a model with a sequence of meticulously designed inputs while analysing the outputs. Through the application of diverse optimization strategies and repeated querying, attackers can deduce confidential information, thereby jeopardizing both user privacy, overall system security, or internal operating rules.

A pertinent example is the case of Microsoft’s AI-powered Bing chatbot. Shortly after its unveiling, a Stanford student, Kevin Liu, exploited the chatbot using a prompt injection attack, leading it to reveal its internal guidelines and code name “Sidney”, even though one of the fundamental internal operating rules of the system was to never reveal such information[8].

A previous RiskInsight article showed an example of Evasion and Oracle attacks and explained other attack models that are not specific to AI, but that are nonetheless an important risk for these technologies.

Question 5: What is the status of regulations? How is generative AI regulated?

Since our 2022 article, there has been significant development in AI regulations across the globe.

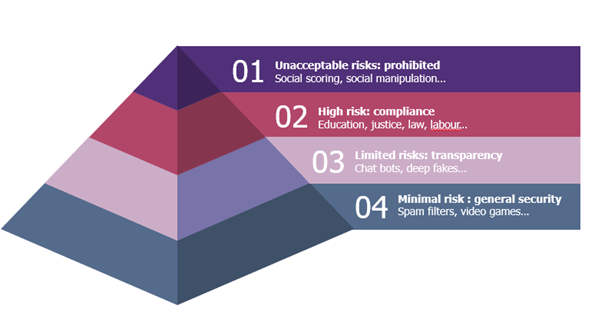

EU

The EU’s digital strategy aims to regulate AI, ensuring its innovative development and use, as well as the safety and fundamental rights of individuals and businesses regarding AI. On June 14, 2023, the European Parliament adopted and amended the proposal for a regulation on Artificial Intelligence, categorizing AI risks into four distinct levels: unacceptable, high, limited, and minimal[9].

US

The White House Office of Science and Technology Policy, guided by diverse stakeholder insights, presented the “Blueprint for an AI Bill of Rights”[10]. Although non-binding, it underscores a commitment to civil rights and democratic values in AI’s governance and deployment.

China

China’s Cyberspace Administration, considering rising AI concerns, proposed the Administrative Measures for Generative Artificial Intelligence Services. Aimed at securing national interests and upholding user rights, these measures offer a holistic approach to AI governance. Additionally, the measures seek to mitigate potential risks associated with Generative AI services, such as the spread of misinformation, privacy violations, intellectual property infringement, and discrimination. However, its territorial reach might pose challenges for foreign AI service providers in China[11].

UK

The United Kingdom is charting a distinct path, emphasizing a pro-innovation approach in its National AI Strategy. The Department for Science, Innovation & Technology released a white paper titled “AI Regulation: A Pro-Innovation Approach”, with a focus on fostering growth through minimal regulations and increased AI investments. The UK framework doesn’t prescribe rules or risk levels to specific sectors or technologies. Instead, it focuses on regulating the outcomes AI produces in specific applications. This approach is guided by five core principles: safety & security, transparency, fairness, accountability & governance, and contestability & redress[12].

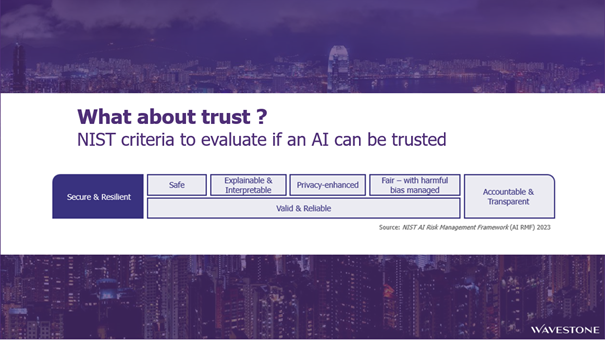

Frameworks

Besides formal regulations, there are several guidance documents, such as NIST’s AI Risk Management Framework and ISO/IEC 23894, that provide recommendations to manage AI-associated risks. They focus on criteria aimed at trusting the algorithms in fine, and this is not just about cybersecurity! It’s about trust.

With such a broad regulatory landscape, organizations might feel overwhelmed. To assist, we suggest focusing on key considerations when integrating AI into operations, in order to setup the roadmap towards being compliant.

- Identify all existing AI systems within the organization and establish a procedure/protocol to identify new AI endeavours.

- Evaluate AI systems using criteria derived from reference frameworks, such as NIST.

- Categorize AI systems according to the AI Act’s classification (unacceptable, high, low or minimal).

- Determine the tailored risk management approach for each category.

Bonus Question: This being said, what can I do right now?

As the digital landscape evolves, Wavestone emphasizes a comprehensive approach to generative AI integration. We advocate that every AI deployment undergo a rigorous sensitivity analysis, ranging from outright prohibition to guided implementation and stringent compliance. For systems classified as high risk, it’s paramount to apply a detailed risk analysis anchored in the standards set by ENISA and NIST. While AI introduces a sophisticated layer, foundational IT hygiene should never be side lined. We recommend the following approach:

- Pilot & Validate: Begin by gauging the transformative potential of generative AI within your organizational context. Moreover, it’s essential to understand the tools at your disposal, navigate the array of available choices, and make informed decisions based on specific needs and use cases.

- Strategic Insight: Based on our client CISO survey, ascertain your ideal AI adoption intensity. Do you resonate with the 10%, 65% or 25% adoption benchmarks shared by your industry peers?

- Risk Mitigation: Ground your strategy in a comprehensive risk assessment, proportional to your intended adoption intensity.

- Policy Formulation: Use your risk-benefit analysis as a foundation to craft AI policies that are both robust and agile.

- Continuous Learning & Regulatory Vigilance: Maintain an unwavering commitment to staying updated with the evolving regulatory landscape. Both locally and globally, it’s crucial to stay informed about the latest tools, attack methods, and defensive strategies.

[1] Des données sensibles de Samsung divulgués sur ChatGPT par des employés (rfi.fr)

[2] https://www.phonandroid.com/chatgpt-100-000-comptes-pirates-se-retrouvent-en-vente-sur-le-dark-web.html

[3] Bouygues Telecom mise sur l’IA générative pour transformer sa relation client (cio-online.com)

[4] Quelles données Chat GPT collecte à votre sujet et pourquoi est-ce important pour votre vie privée en ligne ? (bitdefender.fr)

[5] OpenAI lance un ChatGPT plus sécurisé pour les entreprises – Le Monde Informatique

[6] Selective Audio Adversarial Example in Evasion Attack on Speech Recognition System | IEEE Journals & Magazine | IEEE Xplore

[7] Not just Tay: A recent history of the Internet’s racist bots – The Washington Post

[8] Microsoft : comment un étudiant a obligé l’IA de Bing à révéler ses secrets (phonandroid.com)

[9] Artificial intelligence act (europa.eu)

[10] https://www.whitehouse.gov/wp-content/uploads/2022/10/Blueprint-for-an-AI-Bill-of-Rights.pdf

[11] https://www.china-briefing.com/news/china-to-regulate-deep-synthesis-deep-fake-technology-starting-january-2023/

[12] A pro-innovation approach to AI regulation – GOV.UK (www.gov.uk)