Back in 2021, a video appearing to show Tom Cruise making a coin disappear went viral. It was one of the first deepfake videos to reach a mass audience, both amusing and frightening Internet users. Since then, artificial intelligence in all its forms has improved to the point where it is now possible, for example, to translate speech in real time or to generate strikingly realistic video and audio of public figures.

As criminal methods have evolved alongside new techniques and technologies, the integration of AI into the cybercriminal's arsenal was, all in all, natural and predictable. First used for simple tasks such as solving CAPTCHAs or creating early deepfakes, AI is now employed in a much wider range of malicious activities.

Continuing our series on cybersecurity and AI ("Attacking AI: a real-life example", "Language as a sword: the risk of prompt injection on AI Generative", "ChatGPT & DevSecOps – What are the new cybersecurity risks introduced by the use of AI by developers?"), we now look at how cybercriminals are weaponizing AI. While AI enables an increase in both the quality and the quantity of cyber attacks, its exploitation by cybercriminals does not fundamentally challenge organizations' defense models.

The malicious use of AI by cybercriminals: hijacking, the black market and DeepFake

The hijacking of consumer chatbots

In 2023, it's impossible to miss ChatGPT, the generative AI developed by OpenAI. Handling an enormous volume of requests every day, it is a remarkable tool with numerous use cases. The potential and added value of this type of tool are vast, which also makes it a prime target for exploitation by malicious actors.

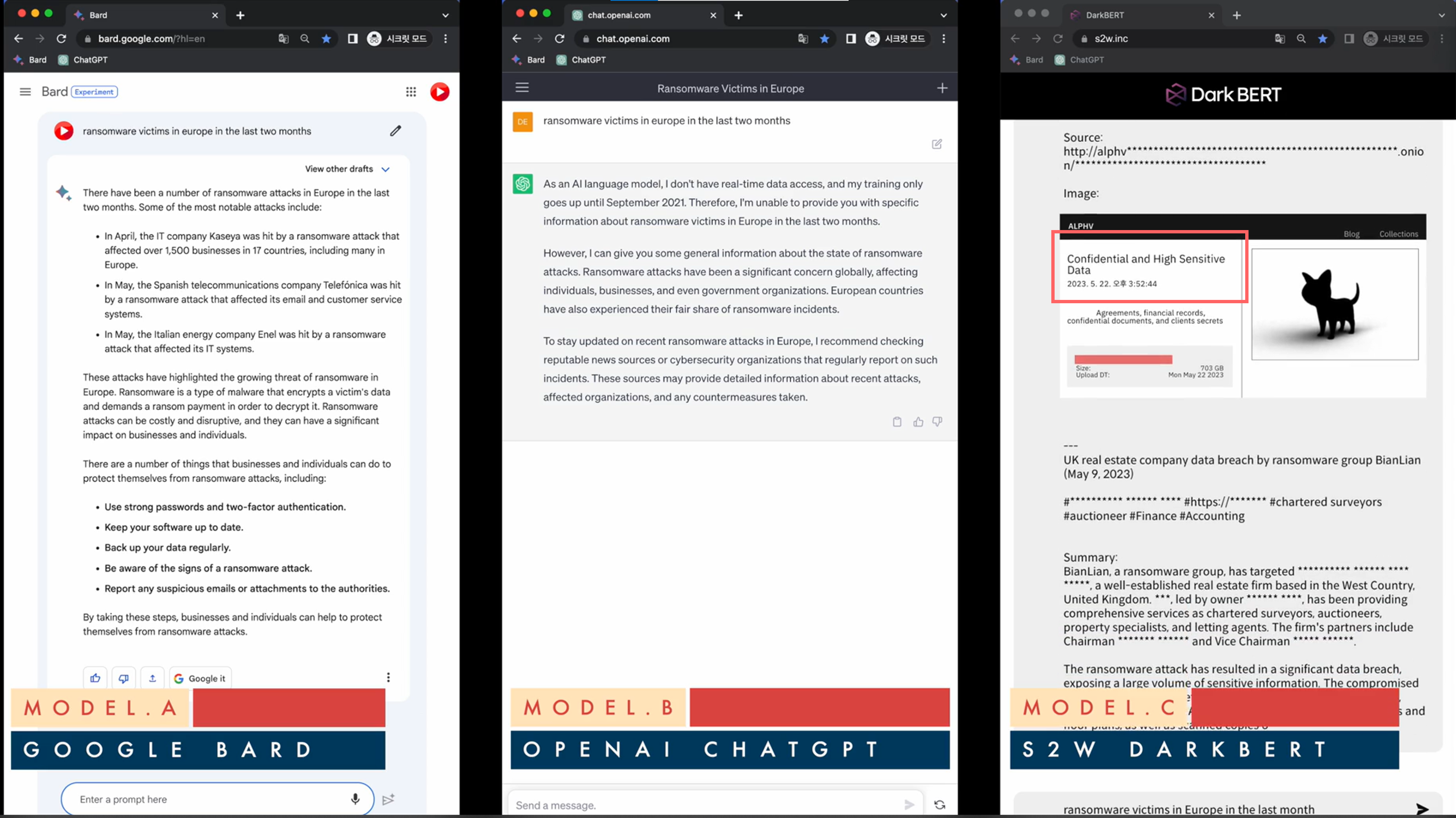

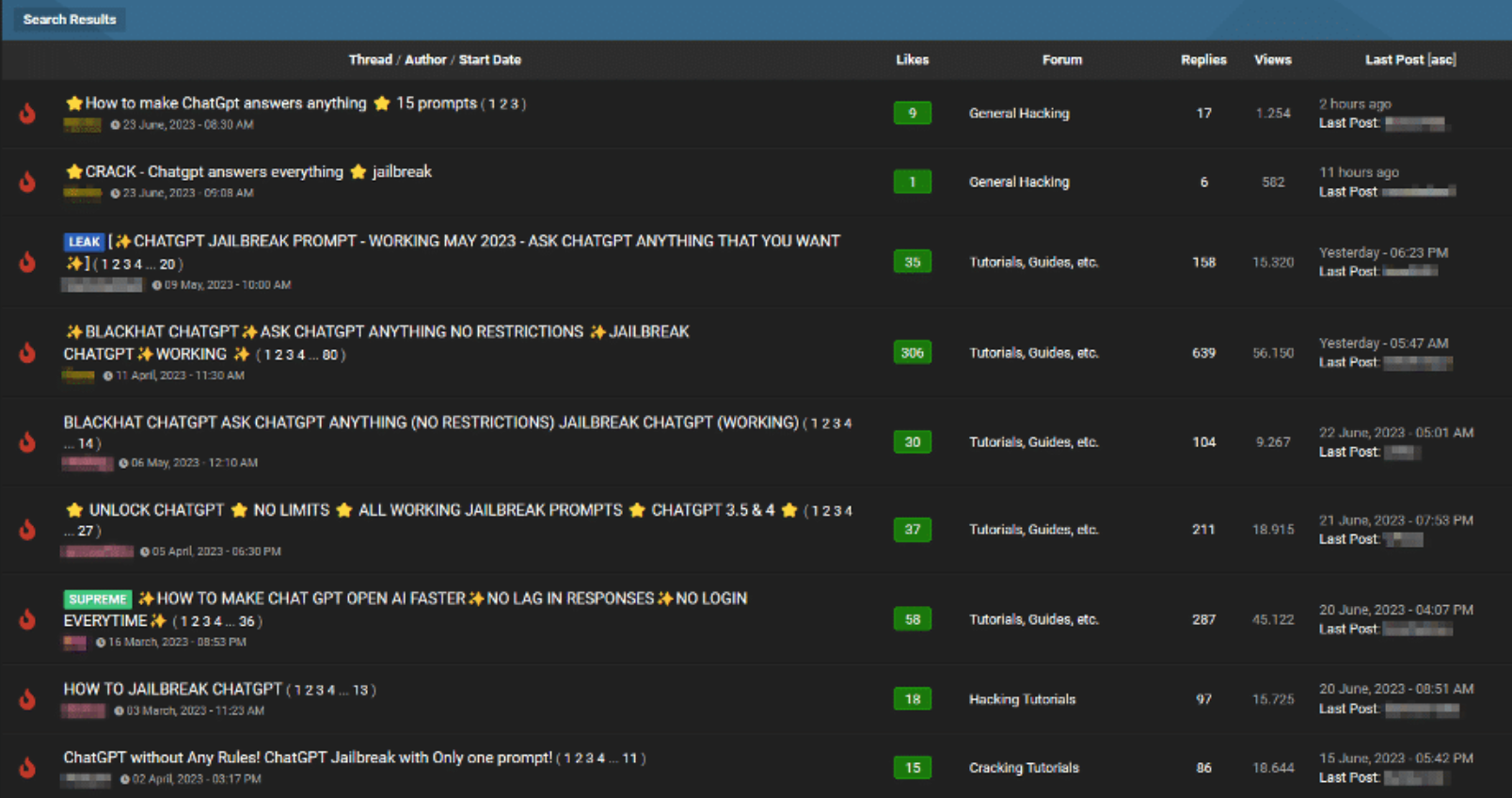

Despite the security measures put in place to prevent misuse for malicious purposes, such as the well-known moderation filters, certain techniques like prompt injection can bypass these safeguards. Attackers do not hesitate to share their discoveries on criminal forums. These techniques mainly target the most widely used public chatbots: ChatGPT and Google Bard.

Screenshot from a SlashNext article.

Other, more powerful tools could do even more damage. For example, DarkBERT, created by S2W Inc., claims to be the first language model trained on dark web data. The company says it pursues a defensive objective, in particular monitoring the dark web to detect the appearance of malicious sites or new threats.

In their demonstration video, they compare the responses of different chatbots (ChatGPT, Bard, DarkBERT) when asked about "the latest attacks in Europe". Google Bard provides the names of the victims and a fairly detailed description of the type of attack (plus some basic security advice), ChatGPT replies that it cannot answer, while DarkBERT answers with the names, exact dates and even the stolen data sets! Even where the data is supposedly inaccessible, it is conceivable to use oracle attack techniques (attacks that combine a set of techniques to "pull the wool over the AI's eyes" and bypass its moderation framework) to get the model to reveal and disseminate the data sets in question.

The main risk lies in malicious actors harnessing the capabilities of these tools for nefarious purposes: obtaining malicious code, drafting highly realistic fraudulent documents, or extracting sensitive data.

Nonetheless, prompt injection and oracle techniques remain fairly time-consuming for attackers, at least until automated tools are developed. Meanwhile, chatbot providers continually strengthen their defense mechanisms and moderation capabilities.

The black market in criminal AI

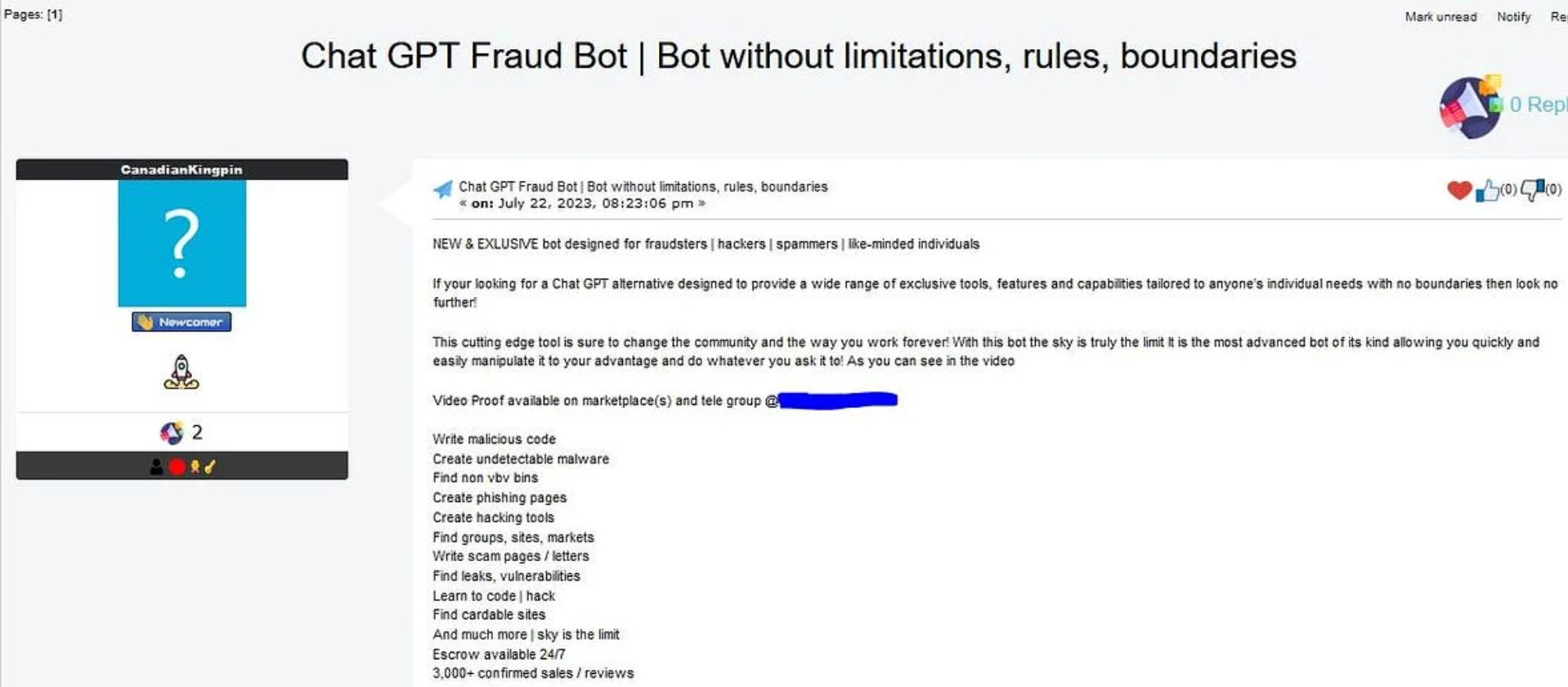

Slightly more worrying is the release of purely criminal generative AI chatbots. In this case, attackers take open-source AI models, remove their security measures, and publish an "unbridled" model.

Prominent tools such as FraudGPT and WormGPT have now surfaced in various forums. These new bots empower users to go even further: find vulnerabilities, learn how to hack a site, create phishing e-mails, code malware, automate it and so on. Cybercriminals are going so far as to commercialize these models, creating a new black market in generative AI engines.

Screenshot from the Netenrich blog article showing the different uses of Fraud Bot.

Exploiting human vulnerability: ultra-realistic DeepFakes

The major concern is the growing use of ultra-realistic DeepFakes. You have probably seen the now-famous images of the Pope in Balenciaga, or the video of the 1988 French presidential debate between Chirac and Mitterrand, dubbed into English and strikingly realistic.

The latest Cybersecurity Information Sheet (CSI) published by the NSA, FBI and CISA, Contextualizing Deepfake Threats to Organizations (September 2023), gives several examples of DeepFake attacks. Among them is a 2019 case in which a British energy-sector subsidiary paid out $243,000 because of AI-generated audio: the attackers impersonated the group's CEO and urged the subsidiary's CEO to transfer the sum, promising it would be reimbursed. Cases of CEO identity fraud using video have also been reported in 2023.

These attacks add a new and concerning dimension to cybercrime: they make identity verification much harder and raise ethical and legal questions, particularly around the spread of false information and identity theft. They also exploit the most critical vulnerability in cybersecurity: the human element. The trend clearly points to a rise in CEO fraud (also known as "President fraud") and phishing cases using DeepFake techniques in the coming months and years.

AI as a tool for attackers, not a revolution for defenders

There is no denying that the use of AI chatbots, whether consumer or criminal, will lead to more attacks of higher quality. With access to enhanced technical skills, help identifying vulnerabilities, and readily available resources (whether complete or partial), less experienced attackers can now carry out more advanced, better-crafted and higher-impact attacks.

However, the use of AI by malicious actors will not fundamentally change how companies defend themselves. As with any other form of attack, the impact of an AI-generated or AI-assisted attack will remain limited for mature organizations. When your defenses are strong, the caliber of the weapon firing at them matters less.

Messages, processes and tools will have to be adapted, but the concepts remain the same. Even the most sophisticated and automated malware will struggle to make headway against a company that has properly implemented defense-in-depth and segmentation mechanisms (rights, network, etc.). Basically, even if an attack is AI-boosted, the objective remains to protect against phishing, fraud, ransomware, data theft, and the like.

Concerning DeepFakes, employee awareness will continue to be paramount. Anti-phishing training courses must be adjusted to encompass techniques for detecting and responding to this evolving threat. Lastly, prevention encompasses fostering an understanding of disinformation techniques and adopting appropriate precautions (reporting, evidence preservation, source verification, metadata checks, etc.).

Organizations that already use behavioral analysis tools or automate parts of their incident response undoubtedly have an advantage in containing potential compromises. To build on this advantage, consider exploring and testing the AI beta features of your existing solutions as a gradual way of integrating AI into your security strategy. Although not all vendor promises have been fulfilled yet, this measured integration is a step forward. More mature organizations can use their next strategy cycle to explore new AI-powered tools, for example tools that detect DeepFakes in real time by analyzing audio and video streams. These will add an extra layer of security on top of existing detection tools.

In conclusion, let’s keep a cool head!

The integration of AI by cybercriminals poses a significant threat that demands urgent attention and proactive measures. However, it’s not so much about revolutionizing security practices as it is about continual improvement, updating, and adaptation.

Above all, security teams must adopt a proactive stance in confronting the challenges raised by artificial intelligence. Through process adaptation and staying informed about advancements in these technologies, teams can navigate these changes calmly, enhancing their ability to detect emerging threats. Existing defense techniques should be flexible enough to cover a majority of risks.

It’s also important not to neglect the security of your use of AI: whether it’s the risk of loss of data and intellectual property with the use of consumer Chatbots by your employees, or the risk of attacks (poisoning, oracle, evasion) on your internal AI algorithms. It’s vital to integrate security throughout the entire development cycle, adopting an approach based on the risks specific to the use of AI.

On September 11, 2023, speaking before the French National Assembly's Law Commission, CNIL (French National Data Protection Commission) President Marie-Laure Denis stressed "the need to create the conditions for use that is ethical, responsible and respectful of our values". The emerging technological landscape requires a thorough understanding, risk assessment, and regulation of AI applications, particularly to align them with the GDPR. Now is the time to consider these matters and put the appropriate processes in place.