Since it was first theorised in the 1950s at the Dartmouth Conference[1], Artificial Intelligence (AI) has undergone significant development. Today, thanks to progress in technological fields such as cloud computing, it appears in many everyday uses. AI can compose music, recognise voices, anticipate our needs, drive cars, monitor our health, and more.

Naturally, the development of AI gives rise to many fears: for example, that AI will make inaccurate computations leading to accidents and other incidents (such as autonomous car accidents), or that it will violate the protection of personal data and could even manipulate that data (a fear largely fuelled by the scandals surrounding major market players[2]).

In the absence of clear regulations in the field of AI, and in order to anticipate future needs, Wavestone set out to study which actors are at the forefront of publishing texts on the framework of AI, what these texts are, which ideas they develop, and what impacts on the security of AI systems can be anticipated.

AI regulation: the global picture

AI legislation

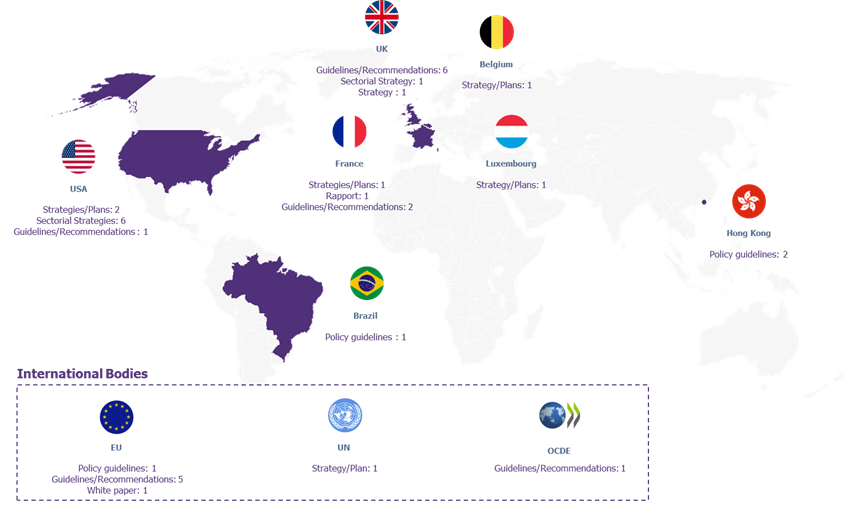

In the body of texts relating to AI regulation, there are no legislative texts to date [3][4]. Nevertheless, the existing texts formalise a set of broad guidelines for developing a normative framework for AI. They include, for example, guidelines and recommendations, strategic plans, and white papers.

They emerge mainly from the United States, Europe, Asia, or major international entities:

Figure 1 Global overview of AI texts[5]

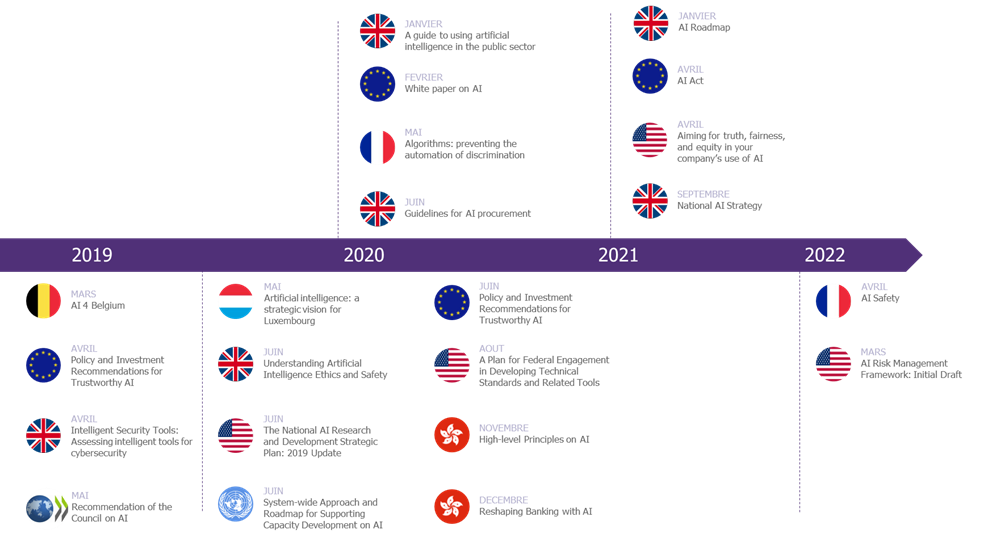

And their pace has not slowed down in recent years. Since 2019, more and more texts on AI regulation have been produced:

Figure 2 Chronology of the main texts

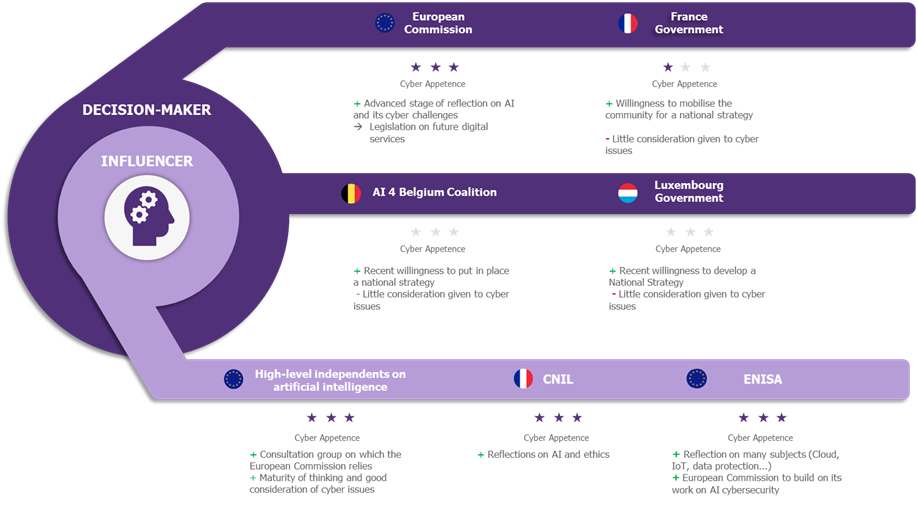

Two types of actors carry these texts with varying perspectives of cybersecurity

The texts are generally carried by two types of actors:

- Decision makers. That is, bodies whose objective is to formalise the regulations and requirements that AI systems will have to meet.

- Influencers. That is, bodies/organisations that have some authority in the field of AI.

At the EU level, decision-makers such as the European Commission or influencers such as ENISA are of key importance in the development of regulations or best practices in the field of AI development.

Figure 3 Key players in Europe

In general, the texts address a few different issues. For example, they provide strategies which can be adopted or guidelines on AI ethics. They are addressed to both governments and companies and occasionally target specific sectors such as the banking sector.

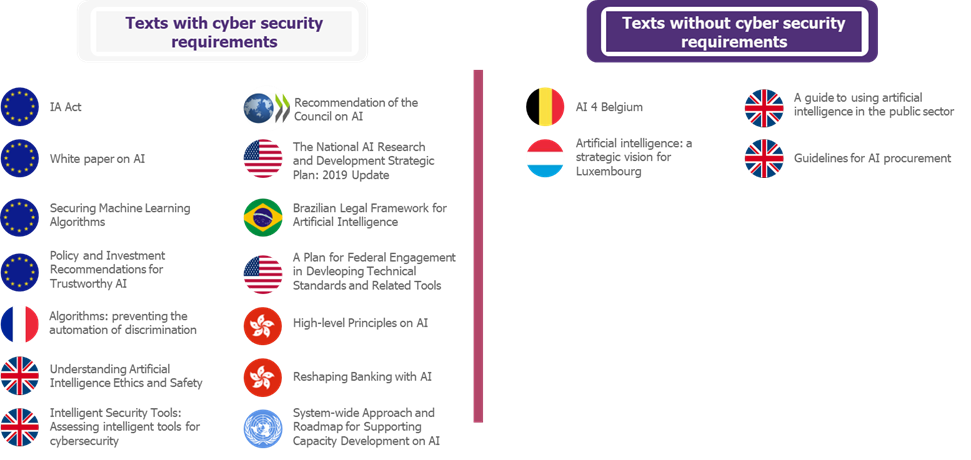

From a cybersecurity point of view, the texts are heterogeneous. The following graph shows how much attention the texts give to cybersecurity:

Figure 4 Text corpus between 2018 and 2021

What the texts say about Cybersecurity

As shown in Figure 4, a significant number of texts propose requirements related to cybersecurity. This is partly because AI has functional specificities that need to be addressed by cyber requirements. To go into the technical details of the texts, let us reduce AI to one of its most common uses today: Machine Learning (details of how Machine Learning works are provided in Annex I: Machine Learning).

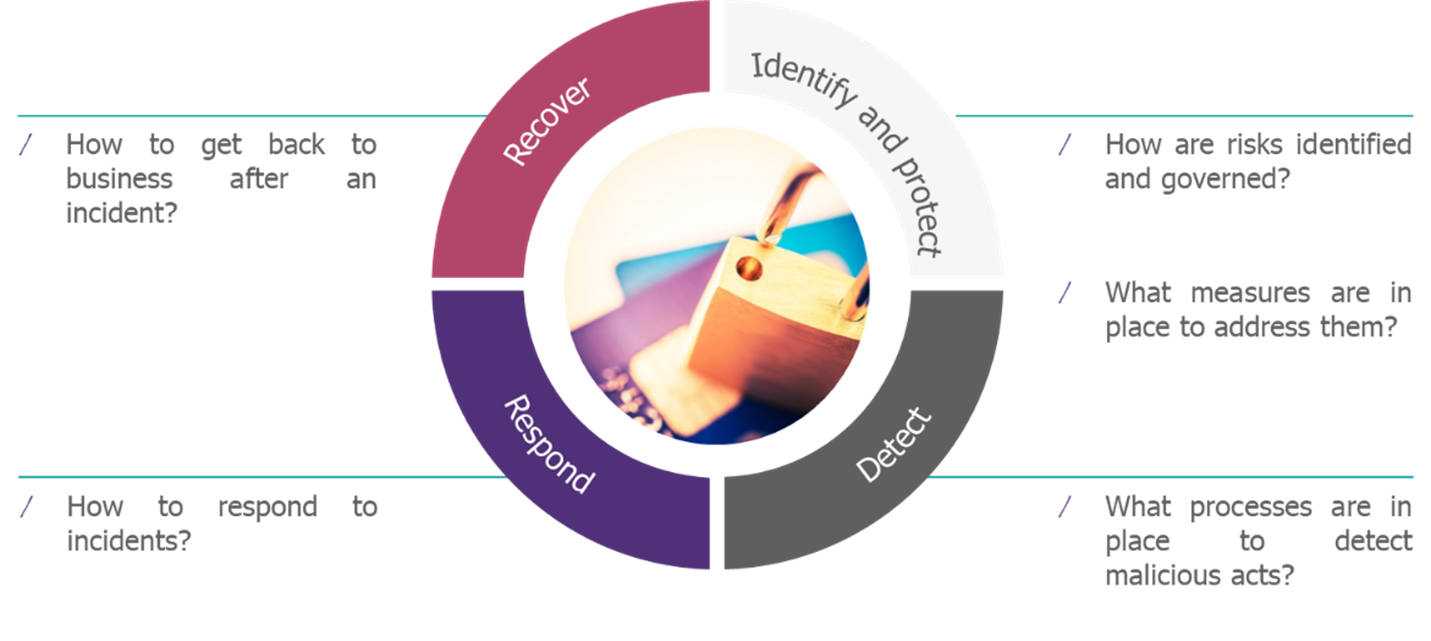

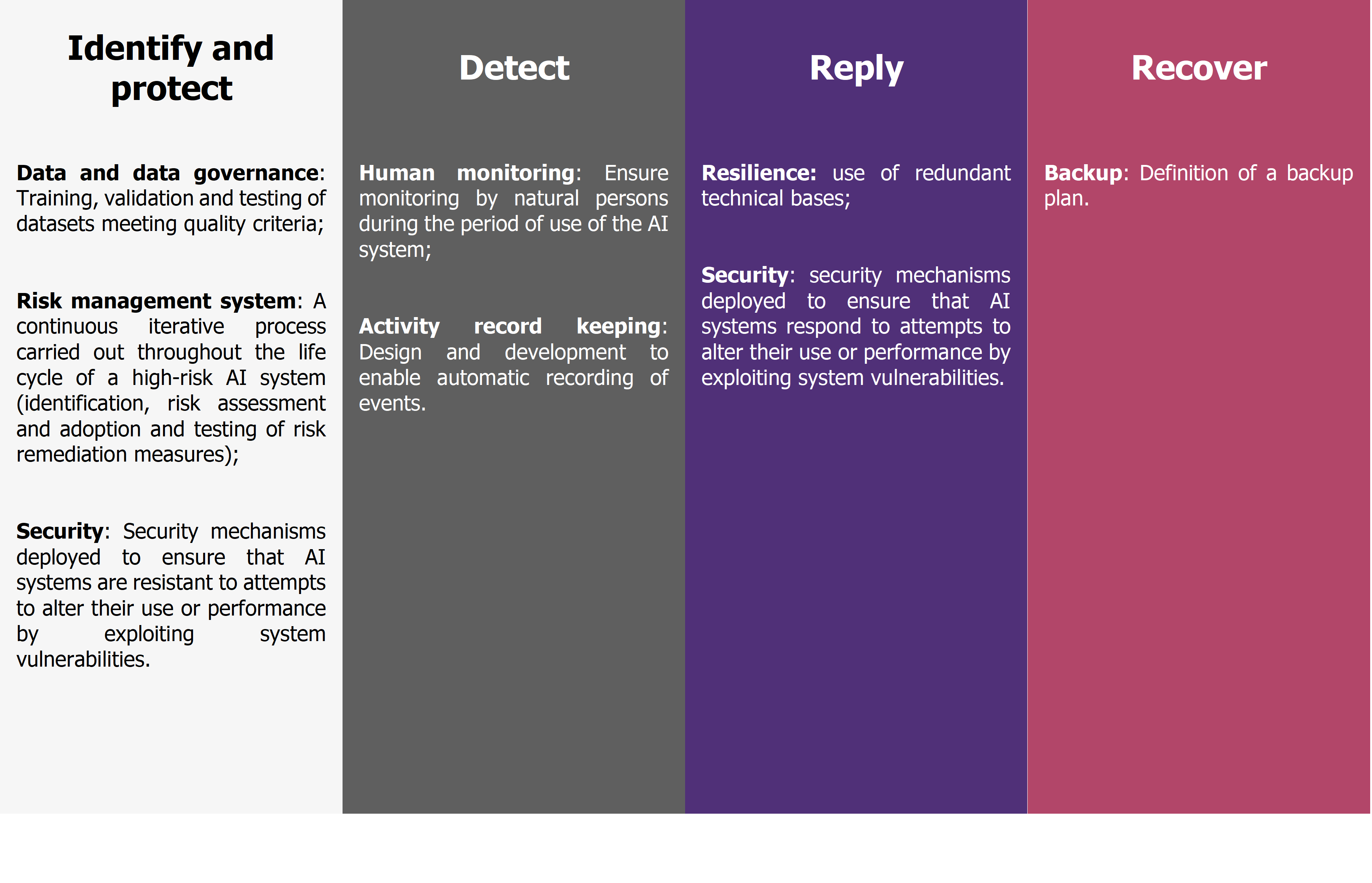

Numerous cyber requirements exist to protect the assets supporting applications that use Machine Learning (ML) throughout the project life cycle. On a macroscopic scale, these requirements can be categorised into the classic cybersecurity pillars[6] extracted from the NIST Framework[7]:

Figure 5 Cybersecurity pillars

The following diagram shows different texts with their cyber components:

Figure 6 Cyber specificities of some important texts

In general, if we cross-reference the results of Figure 6 with those of the study of all the texts, it appears that three requirements are particularly addressed:

- Analyse the risks on ML systems considering their specificities, to identify both “classical” and ML-specific security measures. To do this, the following steps should generally be followed:

  - Understand the interests of attackers in attacking the ML system.

  - Identify the sensitivity of the data handled in the life cycle of the ML system (e.g., personal, medical, military etc.).

  - Frame the legal and intellectual property requirements (who owns the model and the data manipulated in the case of cloud hosting, for example).

  - Understand where the different supporting assets of applications using Machine Learning are hosted throughout the life cycle of the Machine Learning system. For example, some applications may be hosted in the cloud, others on-premises. The cyber risk strategy should be adjusted accordingly (management of service providers, different flows etc.).

  - Understand the architecture and exposure of the model. Some models are more exposed than others to Machine Learning-specific attacks. For example, some models are publicly exposed and thus may be subject to a thorough reconnaissance phase by an attacker (e.g. by submitting inputs and observing outputs).

  - Include specific attacks on Machine Learning algorithms. There are three main types of attack: evasion attacks (which target integrity), oracle attacks (which target confidentiality) and poisoning attacks (which target integrity and availability).

- Track and monitor actions. This includes at least two levels:

  - Traceability (log of actions) to allow monitoring of access to resources used by the ML system.

  - More “business” detection rules to check that the system is still performing as expected and possibly detect whether an attack on it is underway.

- Have data governance. As explained in Annex I: Machine Learning, data is the raw material of ML systems. Therefore, a set of measures should be taken to protect it, such as:

  - Ensure integrity throughout the entire data life cycle.

  - Secure access to data.

  - Ensure the quality of the data collected.
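As an illustration of two of the measures above (data integrity and a “business” detection rule), here is a minimal Python sketch. It is not taken from any of the texts studied: the names `dataset_digest` and `AccuracyMonitor`, the window size and the accuracy threshold are all hypothetical choices made for the example.

```python
import hashlib
from collections import deque


def dataset_digest(records):
    """Return a SHA-256 digest over an ordered list of text records.

    Storing this digest when a dataset is approved, and recomputing it
    before each training run, is one simple way to detect tampering.
    """
    h = hashlib.sha256()
    for record in records:
        data = record.encode("utf-8")
        # Length-prefix each record so ["ab", "c"] and ["a", "bc"] differ.
        h.update(len(data).to_bytes(8, "big"))
        h.update(data)
    return h.hexdigest()


class AccuracyMonitor:
    """Hypothetical detection rule: alert when rolling accuracy drops."""

    def __init__(self, window=100, threshold=0.9):
        # Keep only the most recent `window` outcomes.
        self.outcomes = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction, expected):
        """Store whether one prediction matched the verified label."""
        self.outcomes.append(prediction == expected)

    def alert(self):
        """Return True when accuracy over a full window is below threshold."""
        # Wait for a full window to avoid noisy alerts on few samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return accuracy < self.threshold
```

In practice, a drop in accuracy can have many causes other than an attack (data drift, for instance), so such a rule would only be a trigger for investigation, alongside the access logs mentioned above.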

It is likely that these points will be present in the first published regulations.

The AI Act: will Europe take the lead as with the GDPR?

In the context of this study, we looked more closely at what has been done in the European Union and one text caught our attention.

The claim that there is no legislation yet is only partly true. In 2021, the European Commission published the AI Act[8]: a legislative proposal that aims to address the risks associated with certain uses of AI. Its objectives, to quote the document, are to:

- Ensure that AI systems placed on the EU market and used are safe and respect existing fundamental rights legislation and EU values.

- Ensure legal certainty to facilitate investment and innovation in AI.

- Strengthen governance and effective enforcement of existing legislation on fundamental rights and security requirements for AI systems.

- Facilitate the development of a single market for legal, safe, and trustworthy AI applications and prevent market fragmentation.

The AI Act is in line with the texts listed above. It adopts a risk-based approach with requirements that depend on the risk levels of AI systems. The regulation thus defines four levels of risk:

- AI systems with unacceptable risks.

- AI systems with high risks.

- AI systems with specific risks.

- AI systems with minimal risks.

Each of these levels is the subject of an article in the legislative proposal to define them precisely and to construct the associated regulation.

Figure 7 The risk hierarchy in the AI Act[9]

For high-risk AI systems, the AI Act proposes cyber requirements along the lines of those presented above. For example, if we use the NIST-inspired categorisation presented in Figure 5, the AI Act proposes the following requirements:

Even if the text is only a proposal (it may be adopted within 1 to 5 years), we note that the European Union is taking the lead by proposing a bold regulation to accompany the development of AI, as it did for personal data with the GDPR.

What future for AI regulation and cybersecurity?

In recent years, numerous texts on the regulation of AI systems have been published. Although there is no legislation to date, the pressure is mounting with numerous texts, such as the AI Act, a European Union proposal, being published. These proposals provide requirements in terms of AI development strategy, ethics and cyber security. For the latter, the requirements mainly concern topics such as cyber risk management, monitoring, governance and data protection. Moreover, it is likely that the first regulations will propose a risk-based approach with requirements adapted according to the level of risk.

In view of its analysis of the situation, Wavestone encourages organisations to adopt a risk-based methodology such as that proposed by the AI Act. This means identifying the risks posed by projects and implementing appropriate security measures. Doing so makes it possible to get started now and avoid having to achieve compliance after the fact.

Annex I: Machine Learning

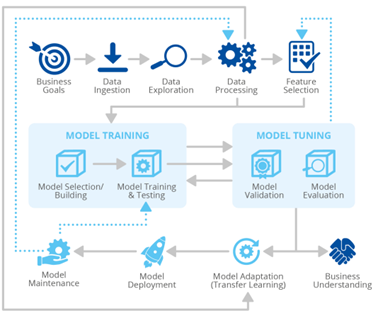

Machine Learning (ML) is defined as the ability of systems[10] to learn to solve a task using data without being explicitly programmed to do so. Heuristically, an ML system learns to give an “adequate output” (e.g. whether a scanner image shows a tumour) from input data (the scanner image in our example).

To quote ENISA[11], the specific features on which Machine Learning is based are the following:

- The data. It is at the heart of Machine Learning. Data is the raw material consumed by ML systems to learn to solve a task and then to perform it once in production.

- A model. That is, a mathematical and algorithmic model that can be seen as a box with a large set of adjustable parameters used to give an output from input data. In a phase called learning, the model uses data to learn how to solve a task by automatically adjusting its parameters, and then once in production it will be able to complete the task using the adjusted parameters.

- Specific processes. These specific processes address the entire life cycle of the ML system. They concern, for example, the data (processing the data to make it usable, for example) or the parameterisation of the model itself (how the model adjusts its parameters based on the data it uses).

- Development tools and environments. For example, many models are trained and then stored directly on cloud platforms as they require a lot of resources to perform the model calculations.

- Actors. Notably because new jobs have been created with the rise of Machine Learning, such as the famous Data Scientists.
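To make the idea of a model with adjustable parameters more concrete, the following minimal Python sketch shows a learning phase followed by use in production. It is a deliberately tiny illustration and not ENISA's definition: the model has only two parameters (`w` and `b`), and the function names, learning rate and number of epochs are assumptions chosen for the example.

```python
def train_linear_model(inputs, targets, learning_rate=0.05, epochs=2000):
    """Learning phase: fit y ~ w * x + b by gradient descent.

    The parameters w and b are adjusted automatically to reduce the
    mean squared error between predictions and the known targets.
    """
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(inputs, targets)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(inputs, targets)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(params, x):
    """Production phase: use the adjusted parameters on new input."""
    w, b = params
    return w * x + b


# Training data generated by the rule y = 2x + 1, which the model
# is never told explicitly.
params = train_linear_model([0, 1, 2, 3], [1, 3, 5, 7])
estimate = predict(params, 4)  # close to 9
```

Real ML models follow the same principle with far more parameters, which is why the quality and integrity of the training data matter so much.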

Generally, the life cycle of a Machine Learning project can be broken down into the following stages:

Figure 8 Life cycle of a Machine Learning project[12]

Annex 2 Non-exhaustive list of texts relating to AI and the framework for its development

| Country or international entity | Title of the document[13] | Published by | Date of publication |
|---|---|---|---|
| France | Making sense of AI: for a national and European strategy | Cédric Villani | March 2018 |
| France | National AI Research Strategy | Ministry of Higher Education, Research and Innovation, Ministry of Economy and Finance, General Directorate of Enterprises, Ministry of Health, Ministry of the Armed Forces, INRIA, DINSIC | November 2018 |
| France | Algorithms: preventing the automation of discrimination | Defenders of rights – CNIL | May 2020 |
| France | AI safety | CNIL | April 2022 |
| Europe | Artificial Intelligence for Europe | European Commission | April 2018 |
| Europe | Ethics Guidelines for Trustworthy AI | High-Level Expert Group on Artificial Intelligence | April 2019 |
| Europe | Building confidence in human-centred artificial intelligence | European Commission | April 2019 |
| Europe | Policy and Investment Recommendations for Trustworthy AI | High-Level Expert Group on Artificial Intelligence | June 2019 |
| Europe | White Paper – AI: a European approach based on excellence and trust | European Commission | February 2020 |
| Europe | AI Act | European Commission | April 2021 |
| Europe | Securing Machine Learning Algorithms | ENISA | November 2021 |
| Belgium | AI 4 Belgium | AI 4 Belgium Coalition | March 2019 |
| Luxembourg | Artificial intelligence: a strategic vision for Luxembourg | Digital Luxembourg, Government of the Grand Duchy of Luxembourg | May 2019 |
| United States | A Vision for Safety 2.0: Automated Driving Systems | Department of Transportation | August 2017 |
| United States | Preparing for the Future of Transportation: Automated Vehicles 3.0 | Department of Transportation | October 2018 |
| United States | The AIM Initiative: A Strategy for Augmenting Intelligence Using Machines | Department of Defense | January 2019 |
| United States | Summary of the 2018 Department of Defense Artificial Intelligence Strategy: Harnessing AI to Advance our Security and Prosperity | Department of Defense | February 2019 |
| United States | The National Artificial Intelligence Research and Development Strategic Plan: 2019 Update | National Science & Technology Council | June 2019 |
| United States | A Plan for Federal Engagement in Developing Technical Standards and Related Tools | NIST (National Institute of Standards and Technology) | August 2019 |
| United States | Ensuring American Leadership in Automated Vehicle Technologies: Automated Vehicles 4.0 | Department of Transportation | January 2020 |
| United States | Aiming for truth, fairness, and equity in your company’s use of AI | Federal Trade Commission | April 2021 |
| United States | AI Risk Management Framework: Initial Draft | NIST | March 2022 |
| United Kingdom | AI Sector Deal | Department for Business, Energy & Industrial Strategy; Department for Digital, Culture, Media & Sport | May 2018 |
| United Kingdom | Data Ethics Framework | Department for Digital, Culture, Media & Sport | June 2018 |
| United Kingdom | Intelligent security tools: Assessing intelligent tools for cyber security | National Cyber Security Centre | April 2019 |
| United Kingdom | Understanding Artificial Intelligence Ethics and Safety | The Alan Turing Institute | June 2019 |
| United Kingdom | Guidelines for AI Procurement | Office for Artificial Intelligence | June 2020 |
| United Kingdom | A guide to using artificial intelligence in the public sector | Office for Artificial Intelligence | January 2020 |
| United Kingdom | AI Roadmap | UK AI Council | January 2021 |
| United Kingdom | National AI Strategy | HM Government | September 2021 |
| Hong Kong | High-level Principles on Artificial Intelligence | Hong Kong Monetary Authority | November 2019 |
| Hong Kong | Reshaping banking with Artificial Intelligence | Hong Kong Monetary Authority | December 2019 |
| OECD | Recommendation of the Council on Artificial Intelligence | OECD | May 2019 |
| United Nations | System-wide Approach and Road map for Supporting Capacity Development on AI | UN System Chief Executives Board for Coordination | June 2019 |
| Brazil | Brazilian Legal Framework for Artificial Intelligence | Brazilian Congress | September 2021 |

[1] Summer school that brought together scientists such as the famous John McCarthy. However, the origins of AI can be attributed to different researchers. For example, in the literature, names like the computer scientist Alan Turing can also be found.

[2] For example, Amazon was accused in October 2021 of not complying with Article 22 of the GDPR. For more information: https://www.usine-digitale.fr/article/le-fonctionnement-de-l-algorithme-de-paiement-differe-d-amazon-violerait-le-rgpd.N1154412

[3] AI does not escape certain laws and regulations such as the GDPR for the countries concerned. We note for example this text from the CNIL: https://www.cnil.fr/fr/intelligence-artificielle/ia-comment-etre-en-conformite-avec-le-rgpd.

[4] Except for legislative proposals as we shall see later for the European Union. The case of Brazil is not treated in this article.

[5] This list is not exhaustive. The figures give orders of magnitude for the main publishers of texts on the development of AI. The texts on which the study is based are listed in Annex 2.

[6] We have chosen to merge the identification and protection phase for the purposes of this article.

[7] National Institute of Standards and Technology (NIST), Framework for improving Critical Infrastructure Cybersecurity, 16 April 2018, available at https://www.nist.gov/cyberframework/framework

[8] Available at: https://artificialintelligenceact.eu/the-act/

[9] Loosely based on: Eve Gaumond, Artificial Intelligence Act: What is the European Approach for AI? in Lawfare, June 2021, available at: https://www.lawfareblog.com/artificial-intelligence-act-what-european-approach-ai

[10] We talk about systems so as not to reduce AI.

[11] https://www.enisa.europa.eu/publications/artificial-intelligence-cybersecurity-challenges

[12] https://www.enisa.europa.eu/publications/securing-machine-learning-algorithms

[13] Note that some titles have been translated into English.