Today, it is still real attacks that most readily allow cyber risk to be quantified, by estimating the costs they caused. NotPetya, the famous 1-billion-dollar malware, is estimated to have cost several large companies, such as FedEx, Saint-Gobain, … more than 300 million. These estimates remain very approximate and generally become possible only several months after an attack. So how can the risks related to cyberattacks be anticipated? How can this risk be reliably quantified?

Risk quantification has recently been receiving strong attention, and rightly so. However, it remains a very complex topic, for two obvious reasons: precise information and feedback are sorely lacking, and cyberattacks generate many intangible impacts (reputation, internal disorganization, strategic damage, shutdown of operations) as well as indirect costs (drop in sales, contractual penalties, drop in the company’s market value, etc.).

Promising avenues for quantifying risk are nevertheless emerging, and solutions able to automate this quantification are being released.

Why should cyber risk be quantified?

Whether for communicating with senior management, business units, or even insurers, there is a real need to assess cyber risks as objectively as possible. The challenge is twofold: to gain relevance and legitimacy. One way forward is to treat cyber risk through a financial prism, like all other business risks, to make it meaningful to decision-makers.

One of the real challenges in quantifying cyber risks lies in building trust with executive committees over the long term. The first step is to adopt a clear posture to convince them and secure the investments needed to launch structuring security programs. Quantification should then help prove the effectiveness of the investments made, and thus sustain the relationship with the executive committees over time, by demonstrating risk reduction in figures and tracking the evolution of risk over several years. This is key, particularly in the wake of the COVID crisis, which will lead to a reduction and optimization of cyber security budgets within companies. Quantifying cyber risk will therefore be essential for tighter control of the ROI of cyber security investments.

The process of securing a company’s information system cannot be carried out without the implementation of Security by Design. Hence, it cannot be carried out without involving the business units. Speaking the same language is therefore necessary.

Finally, to avoid being backed against the wall in the event of an attack, it is essential for companies to anticipate the potential costs of an attack so that they can adapt their provisions and insurance. Quantification allows them to do this.

What are the main difficulties encountered?

Because many impacts of cyberattacks are intangible, they are difficult to assess objectively. This is the case, for example, of the impact on a company’s image and reputation, or of strategic damage and internal disorganization. Other impacts are tangible but indirect, such as a loss of market share or a drop in the company’s market value, which further complicates the task of companies that wish to quantify their risks.

There is no universal formula for calculating the impact of an attack on a company. The impact depends on several parameters: the size of the company, the level of complexity and openness of the information system, the cyber maturity, etc. A company’s level of exposure depends essentially on its level of cyber security maturity. There are frameworks such as NIST, ISO, CIS, etc. for estimating the level of maturity in cyber security, but few companies manage to implement them or use them to their full extent.

Companies wishing to quantify their cyber risks face a lack of statistical databases on the cost of cyberattacks. Most companies communicate little or nothing about it, probably to avoid alarming their customers and partners. And yet collaboration would be key in the face of increasingly clever attackers, both to increase companies’ cyber-resilience and to facilitate risk quantification. For example, Altran and Norsk Hydro were hit by similar ransomware from the same group of attackers!

Some first clues for quantifying cyber risk

Christine Lagarde, as Managing Director of the IMF, has already taken up the issue and published a blog post and a methodology for quantifying cyber risks in the banking sector, used within the IMF. So how can we extend quantification to other sectors?

Prerequisites for optimal risk quantification

The FAIR methodology is one of the most widely used to quantify risks. Effective risk quantification requires:

- A good knowledge of the company’s most critical risks. Given the complexity of FAIR, it is better not to spread efforts too thin and to focus on the most important risk scenarios. You still have to know them! A risk mapping exercise is to be expected, for which the business units will need to be mobilized;

- A good understanding of existing security measures to ensure their ability to resist attacks and to estimate the residual impacts;

- A first draft of a repository of typical costs (legal fees, communications fees, etc.), which will be completed over time, and which requires business expertise to identify and estimate costs.

Also, estimating the cost of risk, due to its cross-functional nature, calls for the collaboration of many stakeholders in the company (HR, legal, etc.), which can be complex to set up.

The FAIR methodology, an approach that specifies certain phases of risk analysis and treatment

Introduction to the FAIR (Factor Analysis of Information Risk) methodology

In 2001, Jack Jones was the CISO for Nationwide Insurance. He was confronted with persistent questions from his senior management asking for figures on the risks to which the company was exposed. Faced with the dissatisfaction caused by the vagueness of his answers, Jack Jones set up a methodology to estimate, in a quantified way, the risks weighing on his business: the FAIR methodology.

Concretely, how does this differ from a risk analysis methodology, such as EBIOS in France?

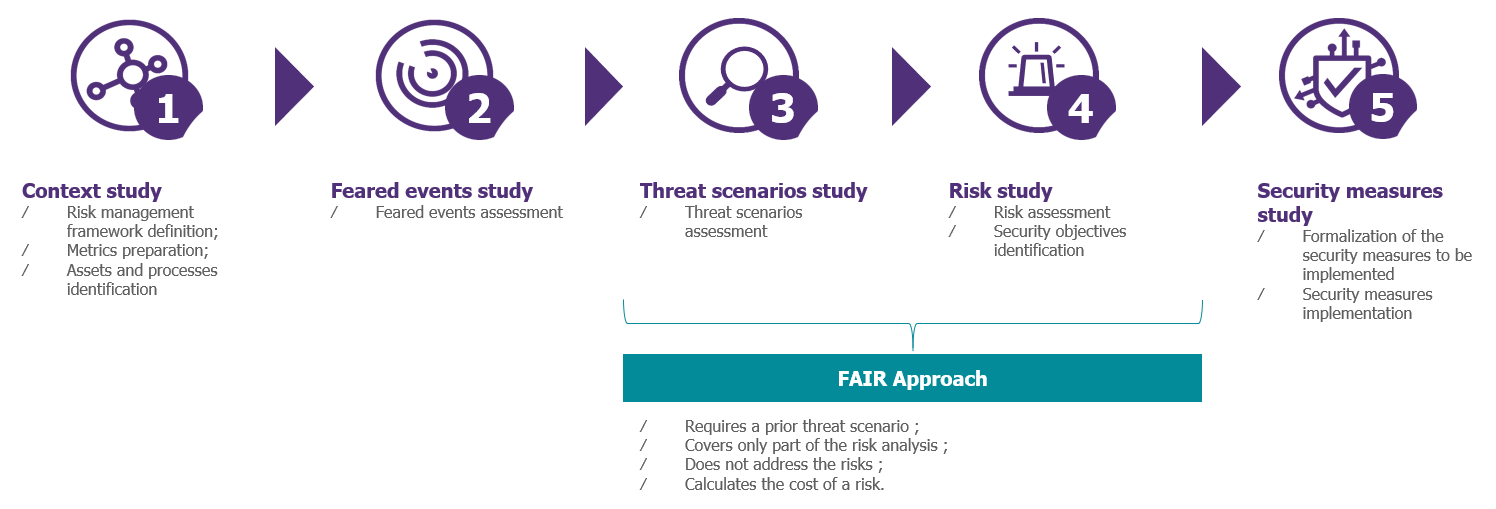

The FAIR methodology is not a substitute for risk analysis: FAIR is a methodology for assessing the impacts and probabilities of a risk more reliably. The impacts are always translated into financial terms in order to make the evaluation tangible. The contributions made are illustrated in the diagram below.

Diagram 1: FAIR, an approach that specifies certain phases of risk analysis and treatment

Usually, cyber risk assessment results in several types of impact (image, financial, operational, legal, etc.). The particularity of the FAIR methodology is to translate each impact into a financial cost (direct, indirect, tangible and intangible costs). For example, if a risk scenario has an impact on the company’s image, FAIR translates this risk into a financial risk by evaluating the cost of the communication agency that will be mobilized to restore the company’s image. If a company’s CEO is mobilized as part of crisis management, then the time spent managing the crisis must be estimated and monetized.

How to apply the FAIR methodology?

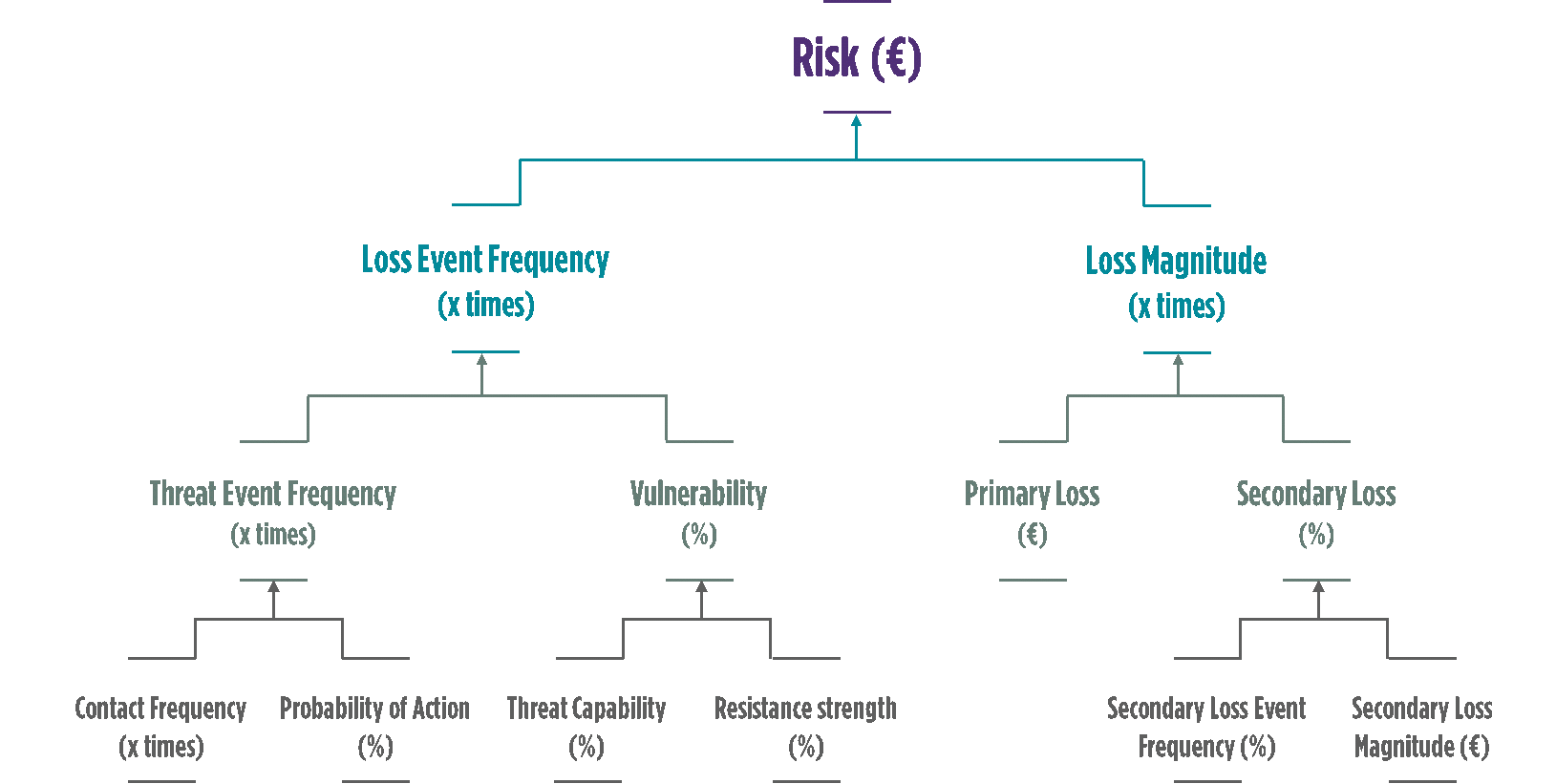

A risk quantified in euros is the product of the frequency of successful attacks (loss event frequency) and the cost of a successful attack (loss magnitude). The diagram below shows the approach used by the FAIR methodology to estimate these two characteristics.

Diagram 2: the criteria taken into account by the FAIR methodology to estimate risks
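As a rough illustration of this product, here is a minimal Python sketch. The function name `annualized_risk` and the figures are hypothetical and only serve to show the arithmetic; they do not come from a real assessment.

```python
def annualized_risk(loss_event_frequency: float, loss_magnitude: float) -> float:
    """Expected yearly loss in euros.

    loss_event_frequency: successful attacks per year (assumed unit)
    loss_magnitude: cost in euros of one successful attack
    """
    if loss_event_frequency < 0 or loss_magnitude < 0:
        raise ValueError("frequency and magnitude must be non-negative")
    return loss_event_frequency * loss_magnitude


# Hypothetical scenario: one successful attack every five years,
# costing 2 million euros each time.
risk = annualized_risk(loss_event_frequency=0.2, loss_magnitude=2_000_000)
print(f"Quantified risk: {risk:,.0f} EUR per year")
```

In practice both inputs are ranges rather than single values, which is why the two branches of the diagram are estimated separately.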

- “Loss Event Frequency” computation

The “contact frequency” represents the frequency at which the threat agent meets the asset to be protected. For example, it may be the frequency at which a natural disaster occurs at a given location.

The “probability of action” is the likelihood that the threat agent will maliciously act on the system once contact is made. This applies only when the threat agent is a living being (it does not apply in the case of a tornado, for example). It is deduced from the expected gain, the effort and cost of the attack, and the risks incurred by the attacker.

The “threat event frequency” is derived from these two parameters.

The “threat capability” consists of estimating the capabilities of the threat agent both in terms of skills (experience and knowledge) and resources (time and materials).

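The “resistance strength” is the company’s ability to withstand this attack scenario. The resistance strength is calculated based on the level of cyber maturity of the entity, for example with a gap analysis against the NIST framework.

These two parameters determine the “vulnerability”, that is, the likelihood that an attempted attack succeeds. Combining the “threat event frequency” with the “vulnerability” then gives the “loss event frequency”.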
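The chain of parameters above can be sketched as follows. This is a simplified illustration, not the official FAIR computation: the 0 to 100 scales, the Gaussian uncertainty around each estimate, and all function and parameter names are assumptions made for the example.

```python
import random


def threat_event_frequency(contact_frequency: float, probability_of_action: float) -> float:
    """Attack attempts per year = contacts per year x probability of acting."""
    return contact_frequency * probability_of_action


def vulnerability(threat_capability: float, resistance_strength: float,
                  spread: float = 10.0, trials: int = 20_000, seed: int = 0) -> float:
    """Share of simulated attempts in which capability exceeds resistance.

    Both inputs are on an assumed 0-100 scale; `spread` is the assumed
    standard deviation reflecting uncertainty around each estimate.
    """
    rng = random.Random(seed)
    successes = sum(
        rng.gauss(threat_capability, spread) > rng.gauss(resistance_strength, spread)
        for _ in range(trials)
    )
    return successes / trials


def loss_event_frequency(tef: float, vuln: float) -> float:
    """Successful attacks per year = attempts per year x vulnerability."""
    return tef * vuln


# Hypothetical scenario: 12 contacts a year, 25% of which lead to an attempt,
# by an attacker slightly more capable than the defences are strong.
tef = threat_event_frequency(contact_frequency=12, probability_of_action=0.25)
vuln = vulnerability(threat_capability=60, resistance_strength=55)
print(f"TEF = {tef:.2f}/year, vulnerability = {vuln:.0%}, "
      f"LEF = {loss_event_frequency(tef, vuln):.2f}/year")
```

Raising `resistance_strength` in this sketch lowers the vulnerability, and therefore the loss event frequency, which is the lever a security program acts on.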

- “Loss Magnitude” computation

“Primary losses” are the cost of direct losses. This includes: interruption of operations, salaries paid to employees while operations are interrupted, cost of mobilizing service providers to mitigate the attack (restoring systems, conducting investigations), etc.

“Secondary losses” are indirect losses, resulting from the reactions of other people affected, and are more difficult to estimate. For example, secondary losses can cover the loss of market share caused by the deterioration of the company’s image, the cost of communicating about an attack through a communication agency, the payment of a fine to a regulator, or even legal fees. Secondary loss is calculated by multiplying the “secondary loss event frequency” by the “secondary loss magnitude” for each of the indirect costs.
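The two loss categories can be combined as in the sketch below. It assumes that the total loss magnitude is the sum of primary losses and frequency-weighted secondary losses, and that the secondary loss event frequency is expressed as the share of successful attacks that trigger the cost. The class name, function name and all amounts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SecondaryLoss:
    label: str
    frequency: float  # assumed: share of successful attacks triggering this cost (0..1)
    magnitude: float  # cost in euros when it is triggered


def loss_magnitude(primary_losses: dict[str, float],
                   secondary_losses: list[SecondaryLoss]) -> float:
    """Cost in euros of one successful attack: direct costs plus weighted indirect costs."""
    primary = sum(primary_losses.values())
    secondary = sum(item.frequency * item.magnitude for item in secondary_losses)
    return primary + secondary


# Hypothetical cost repository for one risk scenario.
primary = {
    "interruption of operations": 500_000,
    "salaries paid during interruption": 120_000,
    "service providers (restoration, investigation)": 80_000,
}
secondary = [
    SecondaryLoss("regulatory fine", frequency=0.1, magnitude=1_000_000),
    SecondaryLoss("legal fees", frequency=0.5, magnitude=60_000),
    SecondaryLoss("communication agency", frequency=0.8, magnitude=50_000),
]
print(f"Loss magnitude: {loss_magnitude(primary, secondary):,.0f} EUR")
```

Keeping each cost as a labelled entry makes it easier to complete the repository over time as business experts refine the estimates.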

A solution that accompanies companies in the implementation of this methodology

Beyond the theoretical description of the methodology, solutions are being developed to enable companies to apply it in a concrete way. This is the case of the French start-up Citalid, for example, which offers a platform for quantifying cyber risks based on the FAIR methodology. This enables the CISO to refine the quantification of risks and make it consistent, thanks to threat intelligence (for monitoring attackers over time). To use the solution, the company must fill in elements relating to its context and, for each of the risk scenarios to be quantified, complete a NIST questionnaire (50 questions for the most basic version or 250 for a finer level of granularity); the rest is calculated automatically.

What are the advantages and limitations of the FAIR methodology?

The FAIR methodology mainly provides the following elements:

- It allows the company to identify and evaluate more precisely the most important risks. For each of the selected risk scenarios, the methodology allows an estimate of average and maximum financial losses and an estimated frequency. For example: “the probability of losing 150 million euros due to the propagation of a destructive NotPetya type ransomware exploiting a 0-day Windows flaw is 20%”.

- It makes it possible to estimate the cost-benefit of the risk reduction action plan. By varying the “resistance strength”, it is possible to estimate the return on investment (ROI) of the security measures to be put in place.

- It translates all cyber risks into a financial risk, which allows a better understanding of the risk by the company’s managers.
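To illustrate how such outputs (average and maximum losses, probability of exceeding a given loss, ROI of a control) could be produced, here is a minimal Monte Carlo sketch. It is an assumption-laden illustration rather than the FAIR reference implementation: the Poisson model for the number of successful attacks per year, the lognormal model for the cost of each attack, the function names and every figure are hypothetical.

```python
import math
import random


def poisson(rng: random.Random, rate: float) -> int:
    """Draw a Poisson count with Knuth's method (fine for small rates)."""
    limit, count, product = math.exp(-rate), 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_annual_losses(lef: float, median_loss: float, sigma: float,
                           years: int = 20_000, seed: int = 1) -> list[float]:
    """Simulate total losses for many hypothetical years."""
    rng = random.Random(seed)
    mu = math.log(median_loss)
    return [
        sum(rng.lognormvariate(mu, sigma) for _ in range(poisson(rng, lef)))
        for _ in range(years)
    ]


def summarize(losses: list[float], threshold: float) -> dict[str, float]:
    """Average loss, worst simulated year, and probability of exceeding a threshold."""
    return {
        "mean": sum(losses) / len(losses),
        "max": max(losses),
        "p_exceed": sum(loss > threshold for loss in losses) / len(losses),
    }


def roi(loss_before: float, loss_after: float, control_cost: float) -> float:
    """Return on investment of a control: net risk reduction per euro spent."""
    return (loss_before - loss_after - control_cost) / control_cost


# Hypothetical scenario: a control raising the resistance strength is assumed
# to cut the loss event frequency from 0.5 to 0.2 successful attacks per year.
before = summarize(simulate_annual_losses(0.5, 1_000_000, 1.0), threshold=5_000_000)
after = summarize(simulate_annual_losses(0.2, 1_000_000, 1.0), threshold=5_000_000)
print(f"Average annual loss before: {before['mean']:,.0f} EUR, after: {after['mean']:,.0f} EUR")
print(f"Probability of losing more than 5M EUR in a year: {before['p_exceed']:.1%}")
print(f"ROI of a 200k EUR control: {roi(before['mean'], after['mean'], 200_000):.0%}")
```

The results depend entirely on the chosen distributions and parameters, so a sketch like this is mainly useful for comparing options (with or without a given control) rather than for reading absolute figures.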

However, applying FAIR is not without constraints, because it requires resources that are sometimes significant (both in person-days and in knowledge of the company’s context). Moreover, each quantification covers only a limited scope (one risk scenario). Finally, risk quantification using the FAIR methodology needs to be refined with standard cost charts associated with each cyber impact. This can be done, for example, by capitalizing on post-mortem analyses of cyber crises, which can often provide a real illustration of the financial impacts.

Thus, the FAIR methodology is a promising approach that still needs to be fully understood and adapted to companies’ context in order to derive concrete benefits.